Implementing effects in URP, from the basics up

Introduction

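I studied Unity Shader入门精要 (Unity Shader Essentials) a while ago but rarely touched shaders afterwards. Now that I'm working with URP, I'm going through the book again and learning HLSL along the way.

I mainly follow the linked URP HLSL beginner series, with some extensions of my own.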

Basic lighting models

Basic formulas

- Lambert: max(0,dot(L,N))

- HalfLambert: dot(L,N) * 0.5 + 0.5 (do not clamp before the remap; otherwise every back-facing point gets a flat 0.5)

- Phong: pow(max(0,dot(reflect(-L,N), V)), Gloss)

- BlinnPhong: pow(max(0,dot(normalize(L+V), N)), Gloss)

Code

Lambert / HalfLambert

Shader "Unlit/Lambert"

{

Properties

{

_MainTex ("Texture", 2D) = "white" {}

_BaseColor ("BaseColor", Color) = (1,1,1,1)

}

SubShader

{

Tags { "RenderType" = "Opaque" "RenderPipeline" = "UniversalPipeline"}

HLSLINCLUDE

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Lighting.hlsl"

TEXTURE2D(_MainTex);

SAMPLER(sampler_MainTex);

CBUFFER_START(UnityPerMaterial)

float4 _MainTex_ST;

float4 _BaseColor;

CBUFFER_END

struct Attributes

{

float4 positionOS:POSITION;

float4 normalOS:NORMAL;

float2 uv : TEXCOORD0;

};

struct Varyings

{

float4 positionHS : SV_POSITION;

float2 uv : TEXCOORD0;

float3 normalWS:TEXCOORD1;

};

Varyings Vert(Attributes i)

{

Varyings o;

o.positionHS = TransformObjectToHClip(i.positionOS.xyz);

o.uv = TRANSFORM_TEX(i.uv, _MainTex);

o.normalWS = TransformObjectToWorldNormal(i.normalOS.xyz, true);

return o;

}

float4 Frag(Varyings i) :SV_Target{

Light mylight = GetMainLight();

real4 LightColor = real4(mylight.color, 1);

float3 lightDir = normalize(mylight.direction);

float lambert = dot(normalize(i.normalWS), lightDir);

float halfLambert = lambert * 0.5f + 0.5f;

float4 color = SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, i.uv);

return color * _BaseColor * halfLambert * LightColor;

}

ENDHLSL

Pass

{

Tags{ "LightMode" = "UniversalForward" }

HLSLPROGRAM

#pragma vertex Vert

#pragma fragment Frag

ENDHLSL

}

}

}

Phong / BlinnPhong

Shader "Unlit/Phong"

{

Properties

{

_MainTex("Texture", 2D) = "white" {}

_GlossColor("BaseColor", Color) = (1,1,1,1)

_Gloss("Gloss", Range(1, 256)) = 1

}

SubShader

{

Tags { "RenderType" = "Opaque" "RenderPipeline" = "UniversalPipeline"}

HLSLINCLUDE

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Lighting.hlsl"

TEXTURE2D(_MainTex);

SAMPLER(sampler_MainTex);

CBUFFER_START(UnityPerMaterial)

float4 _MainTex_ST;

float4 _GlossColor;

float _Gloss;

CBUFFER_END

struct Attributes

{

float4 positionOS:POSITION;

float4 normalOS:NORMAL;

float2 uv : TEXCOORD0;

};

struct Varyings

{

float4 positionHS : SV_POSITION;

float2 uv : TEXCOORD0;

float3 normalWS:TEXCOORD1;

float3 viewDirWS:TEXCOORD2;

};

Varyings Vert(Attributes i)

{

Varyings o;

o.positionHS = TransformObjectToHClip(i.positionOS.xyz);

o.uv = TRANSFORM_TEX(i.uv, _MainTex);

o.viewDirWS = normalize(_WorldSpaceCameraPos.xyz - TransformObjectToWorld(i.positionOS.xyz));// world-space view direction

o.normalWS = TransformObjectToWorldNormal(i.normalOS.xyz, true);

return o;

}

float4 Frag(Varyings i) :SV_Target{

Light mylight = GetMainLight();

real4 LightColor = real4(mylight.color, 1);

float3 lightDir = normalize(mylight.direction);

float3 viewDir = normalize(i.viewDirWS);

float3 worldNormal = normalize(i.normalWS);

float phong = pow(max(dot(reflect(-lightDir, worldNormal), viewDir), 0), _Gloss);

float blinnPhong = pow(max(dot(normalize(lightDir + viewDir), worldNormal), 0), _Gloss);

float4 col = SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, i.uv);

float4 diffuse = LightColor * col * max(dot(lightDir, worldNormal), 0);

float4 specular = _GlossColor * blinnPhong * LightColor;

return float4(specular.rgb + diffuse.rgb, col.a);

}

ENDHLSL

Pass

{

Tags{ "LightMode" = "UniversalForward" }

HLSLPROGRAM

#pragma vertex Vert

#pragma fragment Frag

ENDHLSL

}

}

}

Summary

- TransformObjectToHClip

- TransformObjectToWorld

- TransformObjectToWorldNormal

- _WorldSpaceCameraPos

- Lighting data: #include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Lighting.hlsl"

- SubShader{HLSLINCLUDE … ENDHLSL} Pass{HLSLPROGRAM … ENDHLSL}. The first time, I wrote HLSLINCLUDE inside the Pass by mistake. There was no error, but the result stayed wrong. Watch out for this!

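Normal mapping

Overview

We do the calculation in world space, in the fragment shader.

Define the struct the vertex shader receives. It needs the vertex position, uv, vertex normal and vertex tangent. From these we compute the world-space position, normal, tangent and bitangent.

To get the bitangent, take the cross product of the normal and the tangent. Multiply by the tangent's w to pick its sign, then multiply by the odd-negative-scale factor unity_WorldTransformParams.w (-1 for mirrored objects, otherwise 1).

o.BtangentWS.xyz = cross(o.normalWS.xyz, o.tangentWS.xyz) * i.tangent.w * unity_WorldTransformParams.w;

In the fragment shader, sample the normal map to get the tangent-space normal, then transform it to world space:

float3x3 T2W = {i.tangentWS.xyz, i.BtangentWS.xyz, i.normalWS.xyz};

float4 norTex = SAMPLE_TEXTURE2D(_NormalTex, sampler_NormalTex, i.uv);

float3 normalTS = UnpackNormalScale(norTex, _NormalScale);

normalTS.z = sqrt(saturate(1 - dot(normalTS.xy, normalTS.xy))); // recompute z so the vector has unit length without changing x and y (saturate avoids sqrt of a negative)

float3 normalWS = normalize(mul(normalTS, T2W));

Use this normal (with the normal map applied) in all subsequent lighting calculations.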

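Code

Shader "Unlit/Normal"

Summary

- Vectors are usually normalized with normalize(). When x and y must stay unchanged, compute the remaining component yourself with the Pythagorean theorem

- UnpackNormal, UnpackNormalScale

- mul(): matrix multiplication

- To save interpolators, pack values into otherwise unused components. In this shader the world-space position's x, y and z are stored in the w components of the tangent, bitangent and normal respectively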

Ramp texture

Basic idea

Instead of the mesh's regular UVs, use the Lambert/half-Lambert value as the x (or y) coordinate to sample the ramp texture.
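Here is a CPU sketch of the ramp lookup in C. A float array stands in for one row of the ramp texture; this is an assumption for illustration. The lighting term replaces the mesh UV as the sampling coordinate. `ramp_u` and `sample_ramp` are hypothetical names.

```c
/* Half-Lambert remap: N·L in [-1, 1] becomes the ramp coordinate u in [0, 1]. */
float ramp_u(float n_dot_l) { return n_dot_l * 0.5f + 0.5f; }

/* Linear sample of a 1D ramp stored as `count` values (count >= 2), u clamped to [0, 1].
   Simplification: the first and last values sit exactly at u = 0 and u = 1,
   whereas a GPU texture samples at texel centers. */
float sample_ramp(const float *ramp, int count, float u) {
    if (u <= 0.0f) return ramp[0];
    if (u >= 1.0f) return ramp[count - 1];
    float x = u * (float)(count - 1);
    int i = (int)x;                 /* left neighbor */
    float t = x - (float)i;         /* blend weight toward the right neighbor */
    return ramp[i] * (1.0f - t) + ramp[i + 1] * t;
}
```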

Code

Shader "Unlit/Ramp"

AlphaTest

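Overview

Use clip() in the fragment shader to discard some pixels.

Code

Shader "Unlit/AlphaTest"

Summary

- clip(x): discards the pixel if x (or any component of x) is less than 0

- step(a, b) is equivalent to a <= b ? 1 : 0. It can replace simple if/else branches in shader code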
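The semantics of clip and step can be written out in C. The helper names are hypothetical; HLSL's step(y, x) returns 1 when x >= y. `select_by_step` shows the typical branch-free replacement for an if/else.

```c
/* HLSL step(edge, x): 1 when x >= edge, else 0 (i.e. edge <= x ? 1 : 0). */
float step_hlsl(float edge, float x) { return x >= edge ? 1.0f : 0.0f; }

/* clip(v) discards the pixel when v < 0; this returns 1 if the pixel is kept
   for the usual alpha-test call clip(alpha - cutoff). */
int alpha_test_keeps(float alpha, float cutoff) { return !(alpha - cutoff < 0.0f); }

/* Branch-free select built from step(): a when x >= edge, otherwise b. */
float select_by_step(float edge, float x, float a, float b) {
    float s = step_hlsl(edge, x);
    return a * s + b * (1.0f - s);
}
```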

AlphaBlend

Overview

- Turn off depth writes

- Set both the render queue and the RenderType to Transparent

- Set the Blend mode
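In ShaderLab those three steps are typically `ZWrite Off`, `Tags { "Queue" = "Transparent" "RenderType" = "Transparent" }` and `Blend SrcAlpha OneMinusSrcAlpha`. Assuming that blend mode, the C sketch below shows what the blend stage computes per channel. `color4` and `blend_alpha` are illustrative names.

```c
typedef struct { float r, g, b, a; } color4;

/* Blend SrcAlpha OneMinusSrcAlpha applied to all four channels:
   result = src * src.a + dst * (1 - src.a) */
color4 blend_alpha(color4 src, color4 dst) {
    float s = src.a;
    float d = 1.0f - src.a;
    color4 out = {src.r * s + dst.r * d, src.g * s + dst.g * d,
                  src.b * s + dst.b * d, src.a * s + dst.a * d};
    return out;
}
```

The result depends on what is already in the color buffer, so transparent objects have to be drawn back to front. This is why they belong in the Transparent queue.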

Code

Shader "Unlit/AlphaBlend"

Summary

- "IgnoreProjector" = "True": the object is not affected by Projectors

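Multiple lights

Basics

- First fetch the additional lights with GetAdditionalLightsCount() and GetAdditionalLight(index, positionWS)

- Add the contributions of all additional lights to the main light's result and output the sum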
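The accumulation can be sketched in C. `light_t` is a hypothetical mirror of the URP `Light` fields this document uses: direction, color, distanceAttenuation and shadowAttenuation. As in this document's AddLight snippet, each additional light contributes half-Lambert × color × both attenuations.

```c
typedef struct { float x, y, z; } float3;

/* Hypothetical CPU mirror of the Light fields used in this document. */
typedef struct {
    float3 direction;            /* normalized, pointing toward the light */
    float3 color;
    float distance_attenuation;
    float shadow_attenuation;
} light_t;

static float dot3(float3 a, float3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

/* Starts from the already-lit main light color and adds every additional light. */
float3 add_additional_lights(float3 main_color, float3 normal, const light_t *lights, int count) {
    float3 sum = main_color;
    for (int i = 0; i < count; i++) {
        float w = (dot3(normal, lights[i].direction) * 0.5f + 0.5f)   /* half-Lambert */
                  * lights[i].distance_attenuation * lights[i].shadow_attenuation;
        sum.x += lights[i].color.x * w;
        sum.y += lights[i].color.y * w;
        sum.z += lights[i].color.z * w;
    }
    return sum;
}
```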

Code

Shader "Unlit/MulLight"

Summary

- Shader enum toggle:

[KeywordEnum(ON, OFF)] _ADD_LIGHT("AddLight", Float) = 1 // declares an enum property with exactly two options, ON and OFF

#pragma shader_feature _ADD_LIGHT_ON _ADD_LIGHT_OFF // keyword names follow PropertyName_EnumName; list every option

Usage: #if _ADD_LIGHT_ON … #endif

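Shadow casting and receiving

Basics

Casting

You can reuse the official shadow caster pass: UsePass "Universal Render Pipeline/Lit/ShadowCaster"

However, a shader that uses the official pass is not SRP Batcher compatible, so we write our own shadow caster pass.

It is based on "Packages/com.unity.render-pipelines.universal/Shaders/ShadowCasterPass.hlsl".

Receiving

TransformWorldToShadowCoord(i.positionWS) // get the shadow coordinate

GetMainLight(shadowcoord).shadowAttenuation // get the shadow value

#pragma multi_compile _ _MAIN_LIGHT_SHADOWS // enable main light shadows

#pragma multi_compile _ _MAIN_LIGHT_SHADOWS_CASCADE // cascaded shadows

#pragma multi_compile _ _SHADOWS_SOFT // soft shadows

Receiving shadows from additional lights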

Code

Main light shadow receiving shader

Shader "Unlit/Shadow"

{

Properties

{

_MainTex("Texture", 2D) = "white" {}

_GlossColor("BaseColor", Color) = (1,1,1,1)

_Gloss("Gloss", Range(1, 256)) = 1

}

SubShader

{

Tags { "RenderType" = "Opaque" "RenderPipeline" = "UniversalPipeline"}

HLSLINCLUDE

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Lighting.hlsl"

TEXTURE2D(_MainTex);

SAMPLER(sampler_MainTex);

CBUFFER_START(UnityPerMaterial)

float4 _MainTex_ST;

float4 _GlossColor;

float _Gloss;

CBUFFER_END

struct Attributes

{

float4 positionOS:POSITION;

float4 normalOS:NORMAL;

float2 uv : TEXCOORD0;

};

struct Varyings

{

float4 positionHS : SV_POSITION;

float2 uv : TEXCOORD0;

float3 normalWS:TEXCOORD1;

float3 positionWS:TEXCOORD2;

};

Varyings Vert(Attributes i)

{

Varyings o;

o.positionHS = TransformObjectToHClip(i.positionOS.xyz);

o.uv = TRANSFORM_TEX(i.uv, _MainTex);

o.positionWS = TransformObjectToWorld(i.positionOS.xyz);

o.normalWS = TransformObjectToWorldNormal(i.normalOS.xyz, true);

return o;

}

float4 Frag(Varyings i) :SV_Target{

Light mylight = GetMainLight(TransformWorldToShadowCoord(i.positionWS));

real4 LightColor = real4(mylight.color, 1);

float3 lightDir = normalize(mylight.direction);

float3 viewDir = normalize(_WorldSpaceCameraPos.xyz - i.positionWS);

float3 worldNormal = normalize(i.normalWS);

float phong = pow(max(dot(reflect(-lightDir, worldNormal), viewDir), 0), _Gloss);

float blinnPhong = pow(max(dot(normalize(lightDir + viewDir), worldNormal), 0), _Gloss);

float4 col = SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, i.uv);

float4 diffuse = LightColor * col * max(dot(lightDir, worldNormal), 0) * mylight.shadowAttenuation;

float4 specular = _GlossColor * blinnPhong * LightColor * mylight.shadowAttenuation;

return float4(specular.rgb + diffuse.rgb, col.a);

}

ENDHLSL

Pass

{

Tags{ "LightMode" = "UniversalForward" }

HLSLPROGRAM

#pragma vertex Vert

#pragma fragment Frag

#pragma multi_compile _ _MAIN_LIGHT_SHADOWS // enable main light shadows

#pragma multi_compile _ _MAIN_LIGHT_SHADOWS_CASCADE // cascaded shadows

#pragma multi_compile _ _SHADOWS_SOFT // soft shadows

ENDHLSL

}

UsePass "Universal Render Pipeline/Lit/ShadowCaster"

}

}

Additional light shadow receiving shader

// AddLight

float4 addLightColor = float4(0, 0, 0, 1);

#if _ADD_LIGHT_ON

#if defined(SHADOWS_SHADOWMASK) && defined(LIGHTMAP_ON)

half4 shadowMask = inputData.shadowMask;

#elif !defined (LIGHTMAP_ON)

half4 shadowMask = unity_ProbesOcclusion;

#else

half4 shadowMask = half4(1, 1, 1, 1);

#endif

int lightCount = GetAdditionalLightsCount();

for (int index = 0; index < lightCount; index++)

{

Light light = GetAdditionalLight(index, i.positionWS, shadowMask);

addLightColor += (dot(normalWS, normalize(light.direction)) * 0.5f + 0.5f)

* real4(light.color, 1) * light.distanceAttenuation * light.shadowAttenuation;

}

#endif

Main light shadow casting

// Reference: "Packages/com.unity.render-pipelines.universal/Shaders/ShadowCasterPass.hlsl"

Pass

{

Name "ShadowCaster"

Tags{"LightMode" = "ShadowCaster"}

ZWrite On

ZTest LEqual

ColorMask 0

HLSLPROGRAM

#pragma vertex VertShadowCaster

#pragma fragment FragShadowCaster

Varyings VertShadowCaster(Attributes i)

{

Varyings o;

o.uv = TRANSFORM_TEX(i.uv, _MainTex);

float3 positionWS = TransformObjectToWorld(i.positionOS.xyz);

float3 normalWS = TransformObjectToWorldNormal(i.normalOS.xyz, true);

Light light = GetMainLight();

o.positionHS = TransformWorldToHClip(ApplyShadowBias(positionWS, normalWS, light.direction.xyz));

#if UNITY_REVERSED_Z

o.positionHS.z = min(o.positionHS.z, o.positionHS.w * UNITY_NEAR_CLIP_VALUE);

#else

o.positionHS.z = max(o.positionHS.z, o.positionHS.w * UNITY_NEAR_CLIP_VALUE);

#endif

return o;

}

half4 FragShadowCaster(Varyings i) :SV_Target

{

return 0;

}

ENDHLSL

}

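Summary

- How I traced the source: what we ultimately need is the shadow value, and the Light struct shows that shadowAttenuation is the field we want.

It is filled in when the light is obtained via GetMainLight(shadowCoord) or GetMainLight(float4 shadowCoord, float3 positionWS, half4 shadowMask).

Those call MainLightRealtimeShadow and MainLightShadow in Shadows.hlsl.

The macros checked inside them show that MAIN_LIGHT_CALCULATE_SHADOWS is required. A global search shows it is defined once _MAIN_LIGHT_SHADOWS is enabled.

Following the functions further reveals _MAIN_LIGHT_SHADOWS_CASCADE, _SHADOWS_SOFT and a few other macros. Enable them as needed.

GetMainLight needs a shadowCoord argument. Searching Shadows.hlsl for shadowCoord turns up several functions.

Checking their parameters and call sites confirms that TransformWorldToShadowCoord can be used directly.

- This was my first time reading the URP source, so I'm recording how I tracked down the key functions.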

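Sprite-sheet (sequence frame) animation

Basics

- Split a sprite-sheet image into tiles, sample one tile at a time, and switch to the next tile at a fixed interval

Code

Shader "Unlit/SequenceFrame"

Summary

- frac(x) returns the fractional part: frac(x) = x - floor(x). This equals x - (int)x only for x >= 0

- _Time provides the elapsed time (_Time.y is in seconds)

- The texture's (0,0) is at the bottom-left corner, so the row index has to be flipped along y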

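Billboard

Basics

Goal: the front of the model always faces the camera.

Approach: transform the vertex positions in the vertex shader so that the rendered model faces the camera.

- Do everything in object space and use the object-space origin as the anchor, so the anchor (center) is (0,0,0)

- Rebuild each vertex from its original (x, y, z): pos = center + right * x + up * y + fwd * z;

- We need right, up and fwd. fwd is the direction toward the camera and is derived from the camera's position; right and up remain to be found.

Assume the billboard's up is world up, up = (0, 1, 0), and take right = normalize(cross(up, fwd)).

Then recompute up = cross(fwd, right) so that up, right and fwd are mutually perpendicular. Use the resulting pos as the object-space vertex position for the rest of the calculation.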

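Code

Shader "Unlit/ADS"

Summary

- The core idea of a billboard is a vertex transformation

- cross() returns a vector perpendicular to both inputs. The formula itself is the standard one; because Unity's coordinate system is left-handed, the direction of the result follows the left-hand rule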

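Glass effect

Basics

First we need a copy of the screen. In the Built-in pipeline this is done with GrabPass or by passing in an RT.

In URP, _CameraColorTexture provides an image of the current screen at the same resolution. It is captured after the opaque objects and the skybox have been rendered.

Declare it with: TEXTURE2D(_CameraColorTexture); SAMPLER(sampler_CameraColorTexture);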

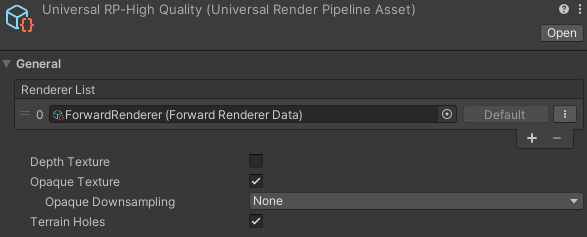

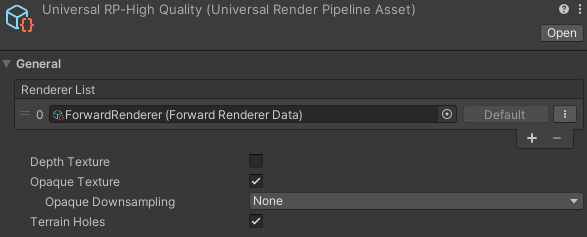

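Opaque Texture must be enabled in the URP settings to use _CameraColorTexture. The texture URP documents for the Opaque Texture option is _CameraOpaqueTexture.

Sampling the screen image requires screen coordinates: ComputeScreenPos(positionCS);

i.screenPos.xy / i.screenPos.w; // screen UV; the homogeneous (perspective) divide is required

Applying the normal: offset the sample point with either the world-space normal or the tangent-space normal.

A world-space normal is expressed in world space, so the distortion changes as the model rotates.

A tangent-space normal does not change with the model's rotation.

Because _CameraColorTexture is captured after opaques but before transparents, transparent objects do not show up behind the glass.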

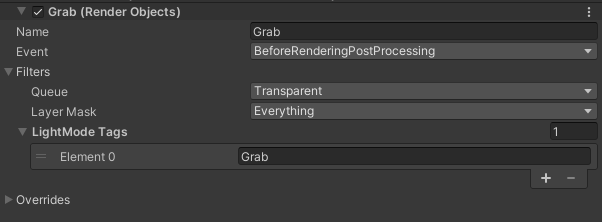

To work around this, use a Render Objects RenderFeature with "LightMode" = "Grab" that renders the glass in the transparent queue, after the other transparent objects.
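Here is a C port of the screen-UV math. It assumes URP's ComputeScreenPos, which computes `o = positionCS * 0.5; o.xy = float2(o.x, o.y * _ProjectionParams.x) + o.w; o.zw = positionCS.zw;`. `proj_sign` stands in for `_ProjectionParams.x`. `distortion` and `texel_size` are hypothetical material parameters for the normal-based offset.

```c
typedef struct { float x, y, z, w; } float4;
typedef struct { float x, y; } float2;

/* Port of ComputeScreenPos; proj_sign stands in for _ProjectionParams.x (1 or -1). */
float4 compute_screen_pos(float4 pos_cs, float proj_sign) {
    float half_w = pos_cs.w * 0.5f;
    float4 o;
    o.x = pos_cs.x * 0.5f + half_w;
    o.y = pos_cs.y * 0.5f * proj_sign + half_w;
    o.z = pos_cs.z;
    o.w = pos_cs.w;
    return o;
}

/* Screen UV after the perspective divide, shifted by the normal's xy. */
float2 distorted_screen_uv(float4 screen_pos, float2 normal_xy, float distortion, float2 texel_size) {
    float2 uv = {screen_pos.x / screen_pos.w, screen_pos.y / screen_pos.w};
    uv.x += normal_xy.x * distortion * texel_size.x;
    uv.y += normal_xy.y * distortion * texel_size.y;
    return uv;
}
```

The divide happens per pixel because dividing in the vertex shader and interpolating would not be perspective-correct.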

Code

Shader "Unlit/Glass"

Summary

Helper functions from ShaderVariablesFunctions.hlsl:

GetVertexPositionInputs(positionOS), GetVertexNormalInputs(normalOS, tangentOS)

These quickly compute the position and normal space transformations.

_CameraColorTexture only works at runtime.

_TextureName_TexelSize: the texture's size (declared outside the CBuffer)

x = 1.0/width

y = 1.0/height

z = width

w = height

_TextureName_ST: the texture's Tiling and Offset

x, y correspond to Tiling x, y

z, w correspond to Offset x, y

Use a RenderFeature to set up special rendering.

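Screen depth: shield effect

Basics

Get the screen depth texture _CameraDepthTexture:

TEXTURE2D(_CameraDepthTexture); SAMPLER(sampler_CameraDepthTexture);

Sample it with screen coordinates from ComputeScreenPos(positionCS). Don't forget the homogeneous divide when using them; this step is usually done in the fragment shader.

Linear01Depth(depth, _ZBufferParams) gives linear depth in the 0-1 range.

Depth Texture must be enabled in the URP settings.

Shield effect: it needs a Fresnel effect and a sweeping light band.

Fresnel effect, basic (Schlick) formula: F0 + (1 - F0) * pow(1.0 - dot(viewDirWS, normalWS), 5.0);

F0 is the material's Fresnel reflectance (at normal incidence).

Generic sweep along the y axis: float flow = saturate(pow(1 - abs(frac(i.positionWS.y * 0.3 - _Time.y * 0.2) - 0.5), 10) * 0.3);

float4 flowcolor = flow * _emissioncolor;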

Code

Shader "Unlit/DepthShield"

Summary

- _ZBufferParams, as documented in UnityInput.hlsl:

// x = 1-far/near

// y = far/near

// z = x/far

// w = y/far

// or, with a reversed depth buffer (UNITY_REVERSED_Z is 1):

// x = -1+far/near

// y = 1

// z = x/far

// w = 1/far
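These parameters feed Linear01Depth, which computes 1 / (x * d + y), and LinearEyeDepth, which computes 1 / (z * d + w). The C sketch below rebuilds _ZBufferParams from near/far and shows that both depth conventions map the near plane to near/far in 0-1 depth and the far plane to 1. `zbuffer_params` is a hypothetical helper.

```c
typedef struct { float x, y, z, w; } float4;

/* Builds _ZBufferParams as listed above; reversed mirrors UNITY_REVERSED_Z. */
float4 zbuffer_params(float near_plane, float far_plane, int reversed) {
    float4 p;
    if (reversed) {
        p.x = -1.0f + far_plane / near_plane;
        p.y = 1.0f;
        p.w = 1.0f / far_plane;
    } else {
        p.x = 1.0f - far_plane / near_plane;
        p.y = far_plane / near_plane;
        p.w = p.y / far_plane;
    }
    p.z = p.x / far_plane;
    return p;
}

/* Same formulas as Linear01Depth and LinearEyeDepth. */
float linear01_depth(float d, float4 zb) { return 1.0f / (zb.x * d + zb.y); }
float linear_eye_depth(float d, float4 zb) { return 1.0f / (zb.z * d + zb.w); }
```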

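Outline for specific objects

Link

urp管线的自学hlsl之路 第二十五篇 Render Feature制作特定模型外描边 (Learning HLSL in URP, part 25: outer outlines for specific models with a Render Feature)

Basics

The outline process:

Solid-color image: filter the objects that need outlines by layer/render queue and render them in a solid color

Blur the solid-color image

Blurred image - solid image gives the outer outline

In fact, blurred - solid also has a negative gradient band just inside the edge.

Showing it gives an inner outline, so taking abs() yields both inner and outer outlines

Add the outline image to the original image and output the result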
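The blur-minus-solid idea is easy to see in 1D. This C sketch uses a 3-tap box blur with clamp-to-edge addressing as a stand-in for whatever blur the pass actually uses. `box_blur3` and `outline` are illustrative names.

```c
#include <math.h>

static float saturate(float v) { return v < 0.0f ? 0.0f : (v > 1.0f ? 1.0f : v); }

/* 3-tap box blur of a 1D mask with clamp-to-edge addressing. */
void box_blur3(const float *in, float *out, int n) {
    for (int i = 0; i < n; i++) {
        float l = in[i > 0 ? i - 1 : 0];
        float r = in[i < n - 1 ? i + 1 : n - 1];
        out[i] = (l + in[i] + r) / 3.0f;
    }
}

/* Outer outline only: saturate(blur - solid). Inner + outer: abs(blur - solid). */
float outline(float blur, float solid, int include_inner) {
    float d = blur - solid;
    return include_inner ? fabsf(d) : saturate(d);
}
```

For the mask {0, 0, 1, 1, 0, 0}, the outer version lights only the two pixels just outside the shape. The abs() version also lights the two pixels just inside it.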

Code

Solid-color image:

Before drawing, set the output target: ConfigureTarget(temp);

The objects are finally drawn with: context.DrawRenderers(renderingData.cullResults, ref draw, ref filter);

Filtering settings: FilteringSettings filter = new FilteringSettings(queue, layer);

Drawing settings: DrawingSettings draw = CreateDrawingSettings(shaderTag, ref renderingData, renderingData.cameraData.defaultOpaqueSortFlags);

// First pass: draw the objects in a solid color

class DrawSoildColorPass : ScriptableRenderPass

{

Setting mysetting = null;

OutlineRenderFeather SelectOutline = null;

ShaderTagId shaderTag = new ShaderTagId("DepthOnly");// only objects whose shader has a pass with this LightMode are drawn

FilteringSettings filter;

public DrawSoildColorPass(Setting setting, OutlineRenderFeather render)

{

mysetting = setting;

SelectOutline = render;

renderPassEvent = setting.passEvent;

// filtering settings

RenderQueueRange queue = new RenderQueueRange();

queue.lowerBound = Mathf.Min(setting.QueueMax, setting.QueueMin);

queue.upperBound = Mathf.Max(setting.QueueMax, setting.QueueMin);

filter = new FilteringSettings(queue, setting.layer);

}

public override void Configure(CommandBuffer cmd, RenderTextureDescriptor cameraTextureDescriptor)

{

int temp = Shader.PropertyToID("_MyTempColor1");

RenderTextureDescriptor desc = cameraTextureDescriptor;

cmd.GetTemporaryRT(temp, desc);

SelectOutline.solidcolorID = temp;

ConfigureTarget(temp); // set this pass's output RT

ConfigureClear(ClearFlag.All, Color.black);

}

public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData)

{

mysetting.mat.SetColor("_SoildColor", mysetting.color);

CommandBuffer cmd = CommandBufferPool.Get("Extract solid color pass");

// drawing settings

var draw = CreateDrawingSettings(shaderTag, ref renderingData, renderingData.cameraData.defaultOpaqueSortFlags);

draw.overrideMaterial = mysetting.mat;

draw.overrideMaterialPassIndex = 0;

// draw (the drawing and filtering settings are ready)

context.DrawRenderers(renderingData.cullResults, ref draw, ref filter);

context.ExecuteCommandBuffer(cmd);

CommandBufferPool.Release(cmd);

}

}

Getting the source image:

renderer.cameraColorTarget;

public override void AddRenderPasses(ScriptableRenderer renderer, ref RenderingData renderingData)

{

if(setting.mat != null)

{

RenderTargetIdentifier sour = renderer.cameraColorTarget; // source image

renderer.EnqueuePass(_DrawSoildColorPass);

_DrawBlurPass.Setup(sour);

renderer.EnqueuePass(_DrawBlurPass);

}

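}

Blurred image:

Take the solid-color image and blur it. For blur techniques, see:

高品质后处理:十种图像模糊算法的总结与实现 (High-quality post-processing: a summary and implementation of ten image blur algorithms)

后处理效果汇总 (post-processing effects roundup)

class DrawBlurPass : ScriptableRenderPass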

- Combining the images

Both passes are simple, so I won't explain them further.

Shader "Unlit/Outline"

{

Properties

{

_MainTex("Texture", 2D) = "white" {}

_SoildColor("SoildColor",Color) = (1,1,1,1)

}

SubShader

{

Tags { "RenderType" = "Opaque" "RenderPipeline" = "UniversalPipeline"}

HLSLINCLUDE

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Lighting.hlsl"

TEXTURE2D(_MainTex);

SAMPLER(sampler_MainTex);

TEXTURE2D(_SourTex);

SAMPLER(sampler_SourTex);

TEXTURE2D(_BlurTex);

SAMPLER(sampler_BlurTex);

CBUFFER_START(UnityPerMaterial)

float4 _MainTex_ST;

float4 _SoildColor;

CBUFFER_END

struct Attributes

{

float4 positionOS:POSITION;

float2 uv : TEXCOORD0;

};

struct Varyings

{

float4 positionHS : SV_POSITION;

float2 uv : TEXCOORD0;

};

ENDHLSL

// Pass 0: solid color

Pass

{

Tags{ "LightMode" = "UniversalForward" }

HLSLPROGRAM

#pragma vertex Vert

#pragma fragment Frag

Varyings Vert(Attributes i)

{

Varyings o;

o.positionHS = TransformObjectToHClip(i.positionOS.xyz);

o.uv = TRANSFORM_TEX(i.uv, _MainTex);

return o;

}

float4 Frag(Varyings i) :SV_Target{

return _SoildColor;

}

ENDHLSL

}

// Pass 1: combine the images

Pass

{

Tags{ "LightMode" = "UniversalForward" }

HLSLPROGRAM

#pragma vertex Vert1

#pragma fragment Frag1

#pragma multi_compile_local _INCOLORON _INCOLOROFF

Varyings Vert1(Attributes i)

{

Varyings o;

o.positionHS = TransformObjectToHClip(i.positionOS.xyz);

o.uv = TRANSFORM_TEX(i.uv, _MainTex);

return o;

}

float4 Frag1(Varyings i) :SV_Target{

float4 blur = SAMPLE_TEXTURE2D(_BlurTex, sampler_BlurTex, i.uv);

float4 sour = SAMPLE_TEXTURE2D(_SourTex, sampler_SourTex, i.uv);

float4 soild = SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, i.uv);

real4 color;

#if _INCOLORON

color = abs(blur - soild) + sour;

#elif _INCOLOROFF

color = saturate(blur - soild) + sour;

#endif

return color;

}

ENDHLSL

}

}

}

Summary

- Producing the solid-color image is the basic filter-then-draw process. See RenderObjectsPass.cs in the URP source for reference

边缘检测描边

基础

参考Unity Shader入门精要,12.3,13.4章节

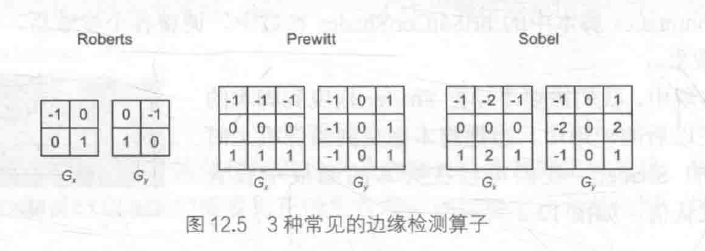

- 基础边缘检测

卷积:通常为22,33的方形区域,每个格子对应一个权重值,

采样一个像素点时,对其周围方形空间采样按权重值叠加后再除以个数得到当前像素值常见的边缘检测算子:Roberts,Prewitt,Sobel等

得到两个方向的梯度值,Gx,Gy

G = sqrt(GxGx,GyGy); 通常优化开方: G = abs(Gx) + abs(Gy)

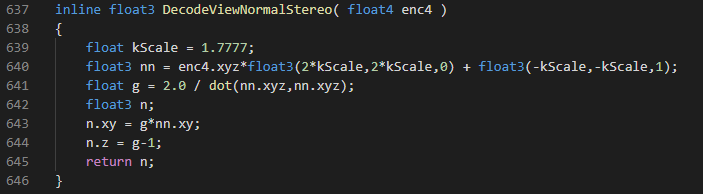

G 值表示梯度值,梯度值越大表示越在边缘检测结果分析

这种方式检测,会产生很多我们不希望的边缘线,如光照影响的,法线影响的,阴影影响的等

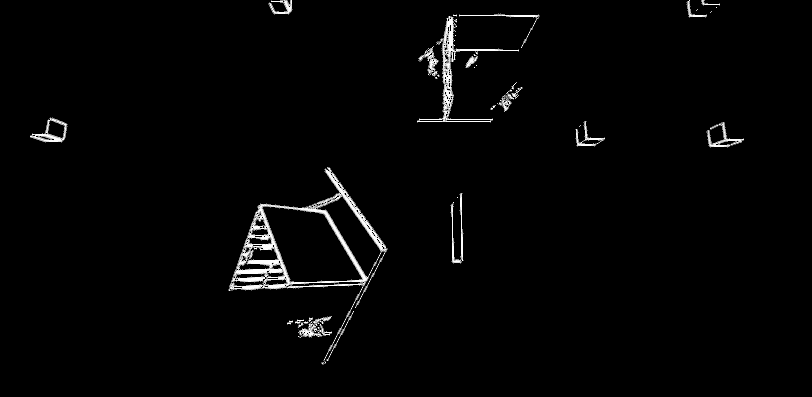
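Here is a C port of the shader's Sobel function, useful for checking the kernels by hand. The kernel arrays follow the order of `uv[0..8]`, row by row from offset (-1,-1) to (1,1). The return value is the same `1 - |Gx| - |Gy|`, so flat areas give 1 and edges give smaller values. The function names are illustrative.

```c
#include <math.h>

/* Same order as the shader's uv[0..8]. With this layout, "Gx" responds to
   changes along y and "Gy" to changes along x; the sum of both is used anyway. */
static const float GX[9] = {-1, -2, -1, 0, 0, 0, 1, 2, 1};
static const float GY[9] = {-1, 0, 1, -2, 0, 2, -1, 0, 1};

/* lum: luminance of the 3x3 neighborhood. */
float sobel_edge(const float lum[9]) {
    float gx = 0.0f, gy = 0.0f;
    for (int i = 0; i < 9; i++) {
        gx += lum[i] * GX[i];
        gy += lum[i] * GY[i];
    }
    return 1.0f - fabsf(gx) - fabsf(gy);   /* cheap |G| approximation */
}

/* Luminance weights used by the shader's luminance() function. */
float luminance(float r, float g, float b) { return 0.2125f * r + 0.7154f * g + 0.0721f * b; }
```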

- 通过深度和深度法线上进行边缘检测

需要获取深度图,以及深度法线图,通过_CameraDepthTexture可以获得深度图,但是URP不支持深度法线图

因此需要获得深度法线图:自定义RenderFeather,在不透明物体渲染之前使用”Hidden/Internal-DepthNormalsTexture”渲染一次,将图片存为”_CameraDepthNormalsTexture”_CameraDepthNormalsTexture.xyz存法线信息,_CameraDepthNormalsTexture.w存深度信息,

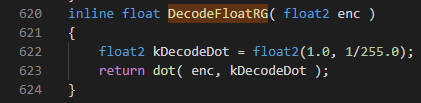

UnityCG.cginc中定义: DecodeFloatRG 解码深度:线性深度 = z + w/255

_CameraDepthNormalsTexutre.xyz法线信息并非真实法线,需要对其进行解码操作获得观察空间法线

UnityCG.cginc中定义:DecodeViewNormalStereo

镜像对比方式:如像素(x,y+1)与(x,y-1),(x+1,y)与(x-1,y)

比较法线以及深度是否相同,相同返回1,不同返回0,代码里对应CheckSame函数,小于一定范围则视为相同检测结果分析

可以明显看到这种方式比第一种方式减少了很多不必要的边缘线
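The per-pair test can be ported to C to experiment with thresholds outside Unity. This sketch uses a depth threshold that scales with the center depth (`0.1 * centerDepth`, as in Unity Shader入门精要) and passes the normals as the encoded xy pairs. The function names are illustrative.

```c
#include <math.h>

/* DecodeFloatRG: depth stored across two channels, depth = z + w / 255. */
float decode_float_rg(float z, float w) { return z * 1.0f + w / 255.0f; }

/* Returns 1 when the normals and depths of the two samples count as the same, else 0. */
int check_same(const float center_n[2], float center_d,
               const float sample_n[2], float sample_d,
               float sensitivity_normals, float sensitivity_depth) {
    float dnx = fabsf(center_n[0] - sample_n[0]) * sensitivity_normals;
    float dny = fabsf(center_n[1] - sample_n[1]) * sensitivity_normals;
    float dd = fabsf(center_d - sample_d) * sensitivity_depth;
    int same_normal = (dnx + dny) < 0.1f;
    int same_depth = dd < 0.1f * center_d;   /* threshold grows with distance */
    return same_normal && same_depth;
}
```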

Code

Basic edge detection

Shader "Unlit/OutlinePPS"

{

Properties

{

_MainTex ("Texture", 2D) = "white" {}

_EdgeColor ("EdgeColor", Color) = (1,1,1,1)

_EdgeOnly ("EdgeOnly",Range(0,1)) = 1

_BackgroundColor ("BackgroundColor", Color) = (1,1,1,1)

}

SubShader

{

Tags { "RenderType" = "Opaque" "RenderPipeline" = "UniversalPipeline"}

Cull Off ZWrite Off ZTest Always

HLSLINCLUDE

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Lighting.hlsl"

TEXTURE2D(_MainTex);

SAMPLER(sampler_MainTex);

CBUFFER_START(UnityPerMaterial)

float4 _MainTex_ST;

float4 _MainTex_TexelSize;

float4 _EdgeColor;

float4 _BackgroundColor;

float _EdgeOnly;

CBUFFER_END

struct Attributes

{

float4 positionOS:POSITION;

float2 uv : TEXCOORD0;

};

struct Varyings

{

float4 positionHS : SV_POSITION;

float2 uv[9] : TEXCOORD0;

};

Varyings Vert(Attributes i)

{

Varyings o;

o.positionHS = TransformObjectToHClip(i.positionOS.xyz);

// UVs of the 3x3 neighborhood (float2(...) is required: a bare (a, b) is the comma operator and evaluates to b)

o.uv[0] = i.uv + _MainTex_TexelSize.xy * float2(-1, -1);

o.uv[1] = i.uv + _MainTex_TexelSize.xy * float2(0, -1);

o.uv[2] = i.uv + _MainTex_TexelSize.xy * float2(1, -1);

o.uv[3] = i.uv + _MainTex_TexelSize.xy * float2(-1, 0);

o.uv[4] = i.uv + _MainTex_TexelSize.xy * float2(0, 0);

o.uv[5] = i.uv + _MainTex_TexelSize.xy * float2(1, 0);

o.uv[6] = i.uv + _MainTex_TexelSize.xy * float2(-1, 1);

o.uv[7] = i.uv + _MainTex_TexelSize.xy * float2(0, 1);

o.uv[8] = i.uv + _MainTex_TexelSize.xy * float2(1, 1);

return o;

}

float luminance(float3 color)

{

return 0.2125*color.r + 0.7154*color.g + 0.0721*color.b;

}

// used for outline (edge) detection

float sobel(Varyings i)// Sobel operator

{

const float Gx[9] = { -1,-2,-1,0,0,0,1,2,1 };

const float Gy[9] = { -1,0,1,-2,0,2,-1,0,1 };

float texColor = 0;

float edgeX = 0;

float edgeY = 0;

for (int it = 0; it < 9; it++)

{

texColor = luminance(SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, i.uv[it]).rgb);

edgeX += texColor * Gx[it];

edgeY += texColor * Gy[it];

}

return 1 - abs(edgeX) - abs(edgeY);

}

float4 Frag(Varyings i) :SV_Target{

float edge = sobel(i);

float4 color = SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, i.uv[4]);

float4 color1 = lerp(_EdgeColor, color, edge);

float4 color2 = lerp(_EdgeColor, _BackgroundColor, edge);

return lerp(color1, color2, _EdgeOnly);

}

ENDHLSL

Pass

{

HLSLPROGRAM

#pragma vertex Vert

#pragma fragment Frag

ENDHLSL

}

}

}

Edge detection with depth and depth-normals

Shader "Unlit/OutlinePPSDepth"

{

Properties

{

_MainTex ("Texture", 2D) = "white" {}

_EdgeColor ("EdgeColor", Color) = (1,1,1,1)

_EdgeOnly ("EdgeOnly",Range(0,1)) = 1

_BackgroundColor ("BackgroundColor", Color) = (1,1,1,1)

_SampleDistance("SampleDistance",Range(0,1)) = 1

_SensitivityDepth ("SensitivityDepth",Range(0,3)) = 1

_SensitivityNormals ("SensitivityNormals",Range(0,3)) = 1

}

SubShader

{

Tags { "RenderType" = "Opaque" "RenderPipeline" = "UniversalPipeline"}

Cull Off ZWrite Off ZTest Always

HLSLINCLUDE

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Lighting.hlsl"

TEXTURE2D(_MainTex);

SAMPLER(sampler_MainTex);

TEXTURE2D(_CameraDepthNormalsTexture);

SAMPLER(sampler_CameraDepthNormalsTexture);

CBUFFER_START(UnityPerMaterial)

float4 _MainTex_ST;

float4 _MainTex_TexelSize;

float4 _EdgeColor;

float4 _BackgroundColor;

float _EdgeOnly;

float _SampleDistance;

float _SensitivityDepth;

float _SensitivityNormals;

CBUFFER_END

struct Attributes

{

float4 positionOS:POSITION;

float2 uv : TEXCOORD0;

};

struct Varyings

{

float4 positionHS : SV_POSITION;

float2 uv : TEXCOORD0;

};

Varyings Vert(Attributes i)

{

Varyings o;

o.positionHS = TransformObjectToHClip(i.positionOS.xyz);

o.uv = i.uv;

return o;

}

float CheckSame(float2 centerNormal, float centerDepth, float2 sampleNormal, float sampleDepth)

{

float2 diffNormal = abs(centerNormal - sampleNormal) * _SensitivityNormals;

float diffDepth = abs(centerDepth - sampleDepth) * _SensitivityDepth;

int isSameNormal = (diffNormal.x + diffNormal.y) < 0.1f;

int isSameDepth = diffDepth < 0.1 * centerDepth;

return isSameNormal * isSameDepth ? 1.0 : 0.0;

}

float DecodeFloatRG(float2 enc)

{

float2 kDecodeDot = float2(1.0, 1 / 255.0);

return dot(enc, kDecodeDot);

}

float3 DecodeViewNormalStereo(float4 enc4)

{

float kScale = 1.7777;

float3 nn = enc4.xyz * float3(2 * kScale, 2 * kScale, 0) + float3(-kScale, -kScale, 1);

float g = 2.0 / dot(nn.xyz, nn.xyz);

float3 n;

n.xy = g * nn.xy;

n.z = g - 1;

return n;

}

float sobel(Varyings i)

{

float2 uv[9];

float2 normal[9];

float depth[9];

uv[0] = i.uv + _SampleDistance * _MainTex_TexelSize.xy * float2(-1, -1);

uv[1] = i.uv + _SampleDistance * _MainTex_TexelSize.xy * float2(0, -1);

uv[2] = i.uv + _SampleDistance * _MainTex_TexelSize.xy * float2(1, -1);

uv[3] = i.uv + _SampleDistance * _MainTex_TexelSize.xy * float2(-1, 0);

uv[4] = i.uv + _SampleDistance * _MainTex_TexelSize.xy * float2(0, 0);

uv[5] = i.uv + _SampleDistance * _MainTex_TexelSize.xy * float2(1, 0);

uv[6] = i.uv + _SampleDistance * _MainTex_TexelSize.xy * float2(-1, 1);

uv[7] = i.uv + _SampleDistance * _MainTex_TexelSize.xy * float2(0, 1);

uv[8] = i.uv + _SampleDistance * _MainTex_TexelSize.xy * float2(1, 1);

for (int it = 0; it < 9; it++)

{

real4 depthnormalTex = SAMPLE_TEXTURE2D(_CameraDepthNormalsTexture, sampler_CameraDepthNormalsTexture, uv[it]);

normal[it] = depthnormalTex.xy; // encoded normal used as-is; decoding with DecodeViewNormalStereo gave wrong results...

depth[it] = DecodeFloatRG(depthnormalTex.zw); // = depthnormalTex.z * 1.0 + depthnormalTex.w / 255.0, the linear depth

}

float edge = 1;

edge *= CheckSame(normal[0], depth[0], normal[8], depth[8]);

edge *= CheckSame(normal[1], depth[1], normal[7], depth[7]);

edge *= CheckSame(normal[2], depth[2], normal[6], depth[6]);

edge *= CheckSame(normal[3], depth[3], normal[5], depth[5]);

return edge;

}

float4 Frag(Varyings i) :SV_Target{

float edge = sobel(i);

float4 color = SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, i.uv);

float4 mainColor = lerp(_EdgeColor, color, edge);

float4 noMainColor = lerp(_EdgeColor, _BackgroundColor, edge);

return lerp(mainColor, noMainColor, _EdgeOnly);

}

ENDHLSL

Pass

{

HLSLPROGRAM

#pragma vertex Vert

#pragma fragment Frag

ENDHLSL

}

}

}

Depth-normals RenderFeature

using UnityEngine;

using UnityEngine.Rendering;

using UnityEngine.Rendering.Universal;

/// <summary>

/// Renders the depth-normals texture

/// </summary>

public class DepthNormalsRenderFeather : ScriptableRendererFeature

{

class DepthNormalsRenderPass : ScriptableRenderPass

{

private RenderTargetHandle destination { get; set; }

private Material depthNormalsMaterial = null;

private FilteringSettings m_FilteringSettings;

ShaderTagId m_ShaderTagId = new ShaderTagId("DepthOnly");

public DepthNormalsRenderPass(RenderQueueRange renderQueueRange, LayerMask layerMask, Material material)

{

m_FilteringSettings = new FilteringSettings(renderQueueRange, layerMask);

this.depthNormalsMaterial = material;

}

public void Setup(RenderTargetHandle destination)

{

this.destination = destination;

}

public override void Configure(CommandBuffer cmd, RenderTextureDescriptor cameraTextureDescriptor)

{

RenderTextureDescriptor descriptor = cameraTextureDescriptor;

descriptor.depthBufferBits = 32;

descriptor.colorFormat = RenderTextureFormat.ARGB32;

cmd.GetTemporaryRT(destination.id, descriptor, FilterMode.Point);

ConfigureTarget(destination.Identifier());

ConfigureClear(ClearFlag.All, Color.black);

}

// Here you can implement the rendering logic.

// Use <c>ScriptableRenderContext</c> to issue drawing commands or execute command buffers

// https://docs.unity3d.com/ScriptReference/Rendering.ScriptableRenderContext.html

// You don't have to call ScriptableRenderContext.submit, the render pipeline will call it at specific points in the pipeline.

public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData)

{

CommandBuffer cmd = CommandBufferPool.Get("DepthNormals pass");

using (new ProfilingSample(cmd, "DepthNormals Prepass"))

{

context.ExecuteCommandBuffer(cmd);

cmd.Clear();

var sortFlags = renderingData.cameraData.defaultOpaqueSortFlags;

var drawSettings = CreateDrawingSettings(m_ShaderTagId, ref renderingData, sortFlags);

drawSettings.perObjectData = PerObjectData.None;

ref CameraData cameraData = ref renderingData.cameraData;

Camera camera = cameraData.camera;

if (cameraData.isStereoEnabled)

context.StartMultiEye(camera);

drawSettings.overrideMaterial = depthNormalsMaterial;

context.DrawRenderers(renderingData.cullResults, ref drawSettings,

ref m_FilteringSettings);

cmd.SetGlobalTexture("_CameraDepthNormalsTexture", destination.id);

}

context.ExecuteCommandBuffer(cmd);

CommandBufferPool.Release(cmd);

}

// Cleanup any allocated resources that were created during the execution of this render pass.

public override void OnCameraCleanup(CommandBuffer cmd)

{

if (destination != RenderTargetHandle.CameraTarget)

{

cmd.ReleaseTemporaryRT(destination.id);

destination = RenderTargetHandle.CameraTarget;

}

}

}

DepthNormalsRenderPass m_ScriptablePass;

RenderTargetHandle depthNormalsTexture;

Material depthNormalsMaterial;

/// <inheritdoc/>

public override void Create()

{

depthNormalsMaterial = CoreUtils.CreateEngineMaterial("Hidden/Internal-DepthNormalsTexture");

m_ScriptablePass = new DepthNormalsRenderPass(RenderQueueRange.opaque, -1, depthNormalsMaterial);

// Configures where the render pass should be injected.

m_ScriptablePass.renderPassEvent = RenderPassEvent.AfterRenderingPrePasses;

depthNormalsTexture.Init("_CameraDepthNormalsTexture");

}

// Here you can inject one or multiple render passes in the renderer.

// This method is called when setting up the renderer once per-camera.

public override void AddRenderPasses(ScriptableRenderer renderer, ref RenderingData renderingData)

{

m_ScriptablePass.Setup(depthNormalsTexture);

renderer.EnqueuePass(m_ScriptablePass);

}

}

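Summary

- URP (in this version) does not provide a depth-normals texture, so a RenderFeature generates one and sets it as a global texture

- The code does not use DecodeViewNormalStereo. When I decoded with it during testing, the result was wrong for reasons I haven't found yet (to be resolved)

However, the undecoded xy can be used directly. The stereographic encoding maps different normals to different xy values, so comparing the encoded xy still detects normal changes; the thresholds just no longer correspond exactly to angles.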

科幻扫描效果

链接

urp管线的自学hlsl之路 第二十三篇 科幻扫描效果前篇

urp管线的自学hlsl之路 第二十四篇 科幻扫描效果后篇

基础

首先要知道这是一个后处理,我们要做的是在屏幕上画线,再附加扫描

再屏幕上画线条需要区分可画区域与不可画区域,通过深度图来区分,只有有深度的地方才需要画线

画线需要与世界坐标系的x,y,z轴对应,目前我们不知道屏幕图像上的一点在世界坐标系的位置,

因此第一步需要计算屏幕像素点在世界坐标的实际位置,即重建世界坐标系。世界坐标通过深度值,相机世界坐标以及一个朝向确定: positionWS = _WorldSpaceCameraPos + depth * Direction; 求:Direction

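This amounts to running the NDC transform backwards: from near, fov and the aspect ratio we compute the vectors from the camera to the four corners of the near plane (far is not needed).

Calculation: height = near * tan(fov / 2);

width = height * camera.aspect; // aspect is the screen's width / height ratio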

fwd = camera.fwd * near;

right = camera.right * width;

up = camera.up * height;

The four vectors are:

BottomLeft = fwd - right - up;

BottomRight = fwd + right - up;

UpLeft = fwd - right + up;

UpRight = fwd + right + up;

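Then rescale them:

float size = BottomLeft.magnitude / near;

BottomLeft = BottomLeft.normalized * size;

BottomRight = BottomRight.normalized * size;

UpLeft = UpLeft.normalized * size;

UpRight = UpRight.normalized * size;

All four vectors have the same length, so this is equivalent to dividing each one by near. Every vector then has a forward component of exactly 1, which is what lets the linear eye depth (the distance along the camera's forward axis) be multiplied in directly.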
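The corner setup and the rescale can be checked on the CPU with the Python sketch below. The function names and the pure-Python vector helpers are illustrative assumptions, not part of the RenderFeature. interpolate_dir stands in for the rasterizer: the rescaled corner vectors are affine in the screen uv, so a bilinear blend gives the same direction the fragment shader receives.

```python
import math


def _scale(v, s):
    return [c * s for c in v]


def _length(v):
    return math.sqrt(sum(c * c for c in v))


def frustum_corner_dirs(fwd, right, up, near, fov_deg, aspect):
    """Camera -> near-plane corner vectors, rescaled as in the text.
    Returned in the order BL, BR, TR, TL (the row order written into _Matrix)."""
    height = near * math.tan(math.radians(fov_deg) * 0.5)
    width = height * aspect  # aspect = width / height
    f, r, u = _scale(fwd, near), _scale(right, width), _scale(up, height)
    corners = [
        [f[i] - r[i] - u[i] for i in range(3)],  # bottom-left
        [f[i] + r[i] - u[i] for i in range(3)],  # bottom-right
        [f[i] + r[i] + u[i] for i in range(3)],  # top-right
        [f[i] - r[i] + u[i] for i in range(3)],  # top-left
    ]
    size = _length(corners[0]) / near  # all four corners have the same length
    return [_scale(c, size / _length(c)) for c in corners]


def interpolate_dir(dirs, u, v):
    """Direction the fragment at screen uv (u, v) receives.
    The corner vectors are affine in (u, v), so a bilinear blend matches
    interpolation across the full-screen quad's two triangles."""
    bl, br, tr, tl = dirs
    bottom = [bl[i] + (br[i] - bl[i]) * u for i in range(3)]
    top = [tl[i] + (tr[i] - tl[i]) * u for i in range(3)]
    return [bottom[i] + (top[i] - bottom[i]) * v for i in range(3)]


def reconstruct_ws(cam_pos, eye_depth, direction):
    """positionWS = _WorldSpaceCameraPos + depth * Direction."""
    return [cam_pos[i] + eye_depth * direction[i] for i in range(3)]
```

For a camera looking down +z (fwd = (0, 0, 1)), every returned vector has z == 1. The screen centre (0.5, 0.5) interpolates to (0, 0, 1), so a pixel there at eye depth 5 reconstructs to the point 5 units straight ahead of the camera.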

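Pass the results into the material through the RenderFeature. Drawing lines along the x, y, z axes:

- UV runs from (0,0) at the bottom-left to (1,1) at the top-right. Split the screen into four regions and give each one the matching vector computed above.

The full-screen quad being rendered has four vertices, so each vertex picks its direction according to the UV region it falls in.

In the fragment shader, each pixel's direction is the interpolation of the four vertex directions. This gives the direction from the camera to the pixel's world position, already scaled to be multiplied by depth.

int t = 0;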

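if (i.uv.x < 0.5 && i.uv.y < 0.5) // bottom-left

t = 0;

else if (i.uv.x > 0.5 && i.uv.y < 0.5) // bottom-right

t = 1;

else if (i.uv.x > 0.5 && i.uv.y > 0.5) // top-right (row 2 of _Matrix)

t = 2;

else // top-left (row 3 of _Matrix)

t = 3;

o.Direction = _Matrix[t].xyz;

- Once we have this vector, the world position follows directly, with depth being the linear eye depth: positionWS = _WorldSpaceCameraPos + depth * Direction + float3(0.01,0.01,0.01); // the small offset makes the drawn lines show up on the actual object surface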

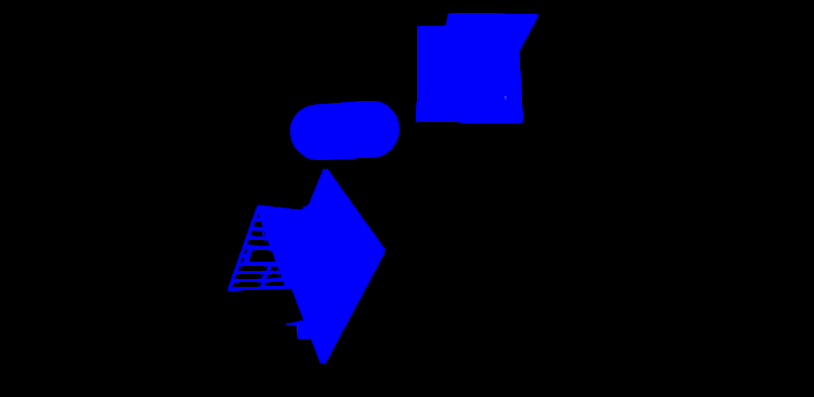

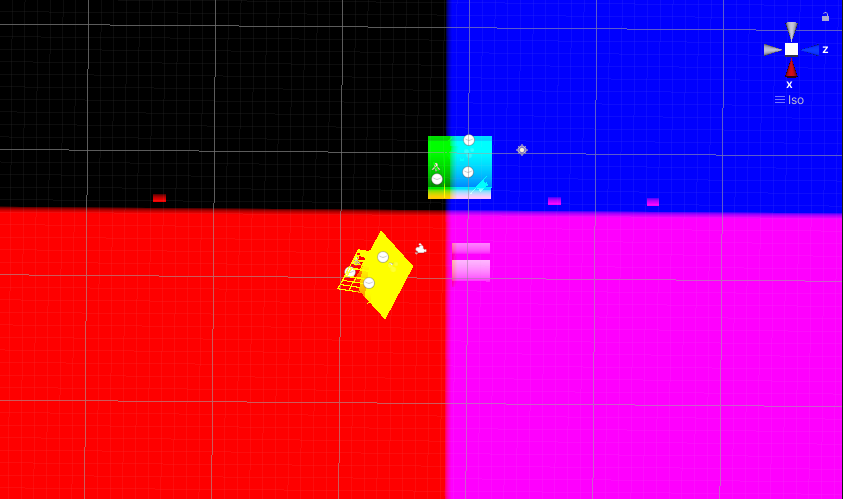

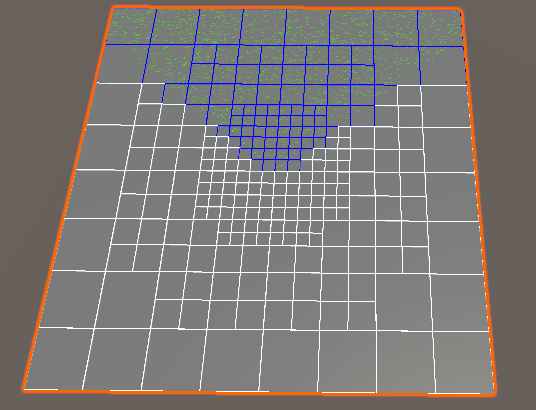

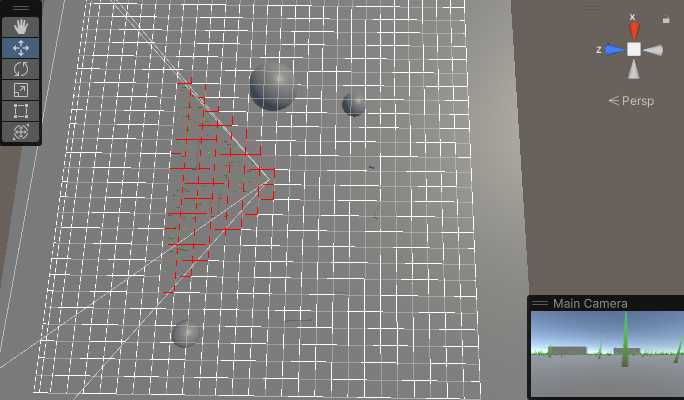

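Output positionWS to check it: the reconstructed world coordinates split the screen into four blocks.

Take frac(positionWS), which wraps each component into 0-1: the result turns into stripes.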

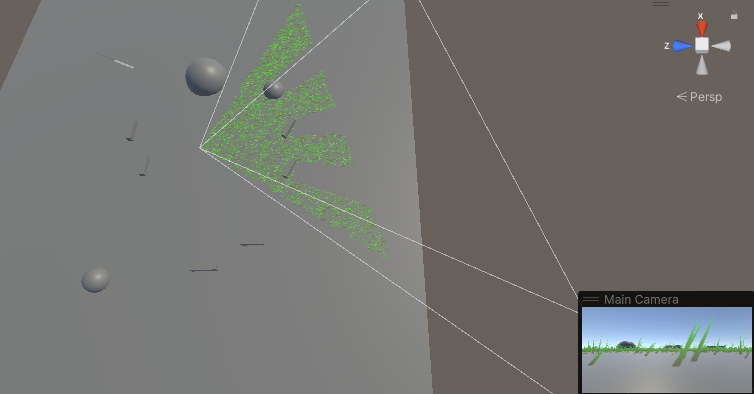

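Use step(0.98, ...) to cut most of it away. What remains are lines of three kinds, corresponding to the x, y and z axes.

Give them colors and the lines become much more visible. They represent the x, y, z axes of the reconstructed world coordinates and are spaced 1 unit apart.

float3 Line = step(0.98, frac(positionWS));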

float3 LineColor = Line.x * _ColorX + Line.y * _ColorY + Line.z * _ColorZ;
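The frac/step line mask can be sketched per axis in Python. The helpers below re-implement HLSL's frac and step. grid_line follows the final shader's step(1 - _Width, frac(positionWS / _Spacing)), and its parameter names are illustrative.

```python
import math


def frac(x):
    """HLSL frac: x - floor(x), always in [0, 1), also for negative x."""
    return x - math.floor(x)


def step(edge, x):
    """HLSL step: 1 where x >= edge, else 0."""
    return 1.0 if x >= edge else 0.0


def grid_line(p, width=0.02, spacing=1.0):
    """One axis of step(1 - _Width, frac(positionWS / _Spacing)):
    1 on a thin band just below each multiple of `spacing`, 0 elsewhere."""
    return step(1.0 - width, frac(p / spacing))


def line_mask(position_ws, width=0.02, spacing=1.0):
    """Per-axis mask (x, y, z), like float3 Line in the shader."""
    return tuple(grid_line(c, width, spacing) for c in position_ws)
```

The band sits just below each multiple of the spacing, so a line is _Width * _Spacing world units thick. Because frac wraps negative values too, the grid continues on the negative side of each axis.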

Outline: reuse the edge detection based on screen depth and depth-normals covered earlier.
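The outline test used by the shader's sobel() function can be mirrored in Python. Sample order and thresholds are copied from the shader below, and the function names are illustrative. Despite its name, the check compares the two diagonals of a 2x2 sample pattern (Roberts-cross style). It also multiplies the two diagonal differences, so a change that shows up in only one diagonal is not flagged.

```python
def decode_depth(z, w):
    """Linear 0-1 depth packed into the zw channels: z + w / 255."""
    return z + w / 255.0


def is_edge(depths, normals, depth_sensitivity=1.0, normal_sensitivity=1.0):
    """Mirror of sobel(): 4 samples in the order (-1,-1), (1,-1), (-1,1), (1,1),
    i.e. BL, BR, TL, TR. Each check multiplies the two diagonal differences."""
    dep = abs(depths[0] - depths[3]) * abs(depths[1] - depths[2]) * depth_sensitivity > 0.01
    nx = abs(normals[0][0] - normals[3][0]) * abs(normals[1][0] - normals[2][0])
    ny = abs(normals[0][1] - normals[3][1]) * abs(normals[1][1] - normals[2][1])
    nor = (nx + ny) * normal_sensitivity > 0.01
    return 1 if (dep or nor) else 0
```

A vertical depth step (left samples near, right samples far) changes both diagonals and is detected. A single sample that differs from the other three is not, which is worth keeping in mind when tuning _DepthSensitivity and _NormalSensitivity.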

Scan: similar to the shield scan effect built earlier.

float flow = saturate(pow(1 - abs(frac(i.positionWS.y * 0.3 - _Time.y * 0.2) - 0.5), 10) * 0.3);

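Tweak it. The original produces a 0-1-0 gradient, but a scan usually wants a 1-0 gradient, so part of the gradient is dropped:

float mask = saturate(pow(abs(frac(positionWS.x + _Time.y * 0.2) - 0.75), 10) * 0.3);

Since pow(0.75, 10) is only about 0.056, the final shader below uses a much larger gain (10 or 200) in place of 0.3.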
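The two band shapes can be compared with the Python sketch below. Here phase stands for the argument of frac, for example positionWS.x + _Time.y * 0.2. That name and the default gain = 200 (taken from the shader's _AXIS_X branch) are choices made for this sketch.

```python
import math


def frac(x):
    return x - math.floor(x)


def saturate(x):
    return min(max(x, 0.0), 1.0)


def shield_flow(phase):
    """Original shield band: 0-1-0, peaking where frac(phase) == 0.5."""
    return saturate((1.0 - abs(frac(phase) - 0.5)) ** 10 * 0.3)


def scan_mask(phase, gain=200.0):
    """Tweaked band: brightest right after each integer of `phase`,
    falling to 0 at frac(phase) == 0.75 (a one-sided 1-0 ramp)."""
    return saturate(abs(frac(phase) - 0.75) ** 10 * gain)
```

Adding time to phase slides the bright leading edge across the world. The short tail between 0.75 and 1 stays near zero (0.25^10 is under 1e-6), which is why the result reads as a 1-0 ramp.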

Code

RenderFeature

using UnityEngine;

using UnityEngine.Rendering;

using UnityEngine.Rendering.Universal;

/// <summary>

/// Sci-fi scan effect

/// </summary>

public class ScanRenderFeather : ScriptableRendererFeature

{

class ScanRenderPass : ScriptableRenderPass

{

private Setting setting;

private RenderTargetIdentifier source;

private Material mat;

public void Setup(Setting setting)

{

this.setting = setting;

mat = new Material(setting.shader);

renderPassEvent = setting.Event;

}

public void Set(RenderTargetIdentifier source)

{

this.source = source;

mat.SetColor("_ColorX", setting.ColorX);

mat.SetColor("_ColorY", setting.ColorY);

mat.SetColor("_ColorZ", setting.ColorZ);

mat.SetColor("_ColorEdge", setting.ColorEdge);

mat.SetColor("_OutlineColor", setting.ColorOutline);

mat.SetFloat("_Width", setting.Width);

mat.SetFloat("_Spacing", setting.Spacing);

mat.SetFloat("_Speed", setting.Speed);

mat.SetFloat("_EdgeSample", setting.EdgeSample);

mat.SetFloat("_NormalSensitivity", setting.NormalSensitivity);

mat.SetFloat("_DepthSensitivity", setting.DepthSensitivity);

if (setting.AXIS == AxisType.X)

{

mat.DisableKeyword("_AXIS_Y");

mat.DisableKeyword("_AXIS_Z");

mat.EnableKeyword("_AXIS_X");

}

else if (setting.AXIS == AxisType.Y)

{

mat.DisableKeyword("_AXIS_Z");

mat.DisableKeyword("_AXIS_X");

mat.EnableKeyword("_AXIS_Y");

}

else

{

mat.DisableKeyword("_AXIS_X");

mat.DisableKeyword("_AXIS_Y");

mat.EnableKeyword("_AXIS_Z");

}

}

// This method is called before executing the render pass.

// It can be used to configure render targets and their clear state. Also to create temporary render target textures.

// When empty this render pass will render to the active camera render target.

// You should never call CommandBuffer.SetRenderTarget. Instead call <c>ConfigureTarget</c> and <c>ConfigureClear</c>.

// The render pipeline will ensure target setup and clearing happens in a performant manner.

public override void OnCameraSetup(CommandBuffer cmd, ref RenderingData renderingData)

{

}

// Here you can implement the rendering logic.

// Use <c>ScriptableRenderContext</c> to issue drawing commands or execute command buffers

// https://docs.unity3d.com/ScriptReference/Rendering.ScriptableRenderContext.html

// You don't have to call ScriptableRenderContext.submit, the render pipeline will call it at specific points in the pipeline.

public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData)

{

int temp = Shader.PropertyToID("temp");

CommandBuffer cmd = CommandBufferPool.Get("ScanEffect");

RenderTextureDescriptor desc = renderingData.cameraData.cameraTargetDescriptor;

Camera cam = renderingData.cameraData.camera;

float height = cam.nearClipPlane * Mathf.Tan(Mathf.Deg2Rad * cam.fieldOfView * 0.5f);

Vector3 up = cam.transform.up * height;

Vector3 right = cam.transform.right * height * cam.aspect;

Vector3 forward = cam.transform.forward * cam.nearClipPlane;

Vector3 ButtomLeft = forward - right - up;

Vector3 ButtomRight = forward + right - up;

Vector3 TopRight = forward + right + up;

Vector3 TopLeft = forward - right + up;

float scale = ButtomLeft.magnitude / cam.nearClipPlane;

ButtomLeft = ButtomLeft.normalized * scale;

ButtomRight = ButtomRight.normalized * scale;

TopRight = TopRight.normalized * scale;

TopLeft = TopLeft.normalized * scale;

Matrix4x4 MATRIX = new Matrix4x4();

MATRIX.SetRow(0, ButtomLeft);

MATRIX.SetRow(1, ButtomRight);

MATRIX.SetRow(2, TopRight);

MATRIX.SetRow(3, TopLeft);

mat.SetMatrix("_Matrix", MATRIX);

cmd.GetTemporaryRT(temp, desc);

cmd.Blit(source, temp, mat);

cmd.Blit(temp, source);

cmd.ReleaseTemporaryRT(temp); // record the release before executing; recorded afterwards, it would never be executed

context.ExecuteCommandBuffer(cmd);

CommandBufferPool.Release(cmd);

}

// Cleanup any allocated resources that were created during the execution of this render pass.

public override void OnCameraCleanup(CommandBuffer cmd)

{

}

}

ScanRenderPass m_ScriptablePass;

public enum AxisType

{

X,

Y,

Z

}

[System.Serializable]

public class Setting

{

public Shader shader = null;

public RenderPassEvent Event = RenderPassEvent.AfterRenderingTransparents;

[ColorUsage(true, true)] public Color ColorX = Color.white;

[ColorUsage(true, true)] public Color ColorY = Color.white;

[ColorUsage(true, true)] public Color ColorZ = Color.white;

[ColorUsage(true, true)] public Color ColorEdge = Color.white;

[ColorUsage(true, true)] public Color ColorOutline = Color.white;

[Range(0, 0.2f), Tooltip("Line width")] public float Width = 0.1f;

[Range(0.1f, 10), Tooltip("Line spacing")] public float Spacing = 1;

[Range(0, 10), Tooltip("Scroll speed")] public float Speed = 1;

[Range(0, 3), Tooltip("Edge sample radius")] public float EdgeSample = 1;

[Range(0, 3), Tooltip("Normal sensitivity")] public float NormalSensitivity = 1;

[Range(0, 3), Tooltip("Depth sensitivity")] public float DepthSensitivity = 1;

[Tooltip("Scan axis")] public AxisType AXIS;

}

[SerializeField]

public Setting setting;

public override void Create()

{

m_ScriptablePass = new ScanRenderPass();

m_ScriptablePass.Setup(setting);

}

public override void AddRenderPasses(ScriptableRenderer renderer, ref RenderingData renderingData)

{

m_ScriptablePass.Set(renderer.cameraColorTarget);

renderer.EnqueuePass(m_ScriptablePass);

}

}

Shader

Shader "Unlit/Scan"

{

Properties

{

[HideInInspector] _MainTex("MainTex",2D) = "white"{}

[HDR]_ColorX("ColorX",Color) = (1,1,1,1)

[HDR]_ColorY("ColorY",Color) = (1,1,1,1)

[HDR]_ColorZ("ColorZ",Color) = (1,1,1,1)

[HDR]_ColorEdge("ColorEdge",Color) = (1,1,1,1)

_Width("Width",float) = 0.02

_Spacing("Spacing",float) = 1

_Speed("Speed",float) = 1

_EdgeSample("EdgeSample",Range(0,1)) = 1

_NormalSensitivity("NormalSensitivity",float) = 1

_DepthSensitivity("DepthSensitivity",float) = 1

[HDR]_OutlineColor("OutlineColor",Color) = (1,1,1,1)

}

SubShader

{

Tags { "RenderType" = "Opaque" "RenderPipeline" = "UniversalPipeline"}

Cull Off ZWrite Off ZTest Always

HLSLINCLUDE

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Lighting.hlsl"

TEXTURE2D(_MainTex);

SAMPLER(sampler_MainTex);

// Use _CameraDepthNormalsTexture instead of the depth texture

// _CameraDepthNormalsTexture: xy hold the encoded normal, zw the depth; linear 0-1 depth = z + w / 255

TEXTURE2D(_CameraDepthTexture);

SAMPLER(sampler_CameraDepthTexture);

TEXTURE2D(_CameraDepthNormalsTexture);

SAMPLER(sampler_CameraDepthNormalsTexture);

CBUFFER_START(UnityPerMaterial)

float4 _MainTex_ST;

float4 _MainTex_TexelSize;

real4 _ColorX;

real4 _ColorY;

real4 _ColorZ;

real4 _ColorEdge;

real4 _OutlineColor;

float _Width;

float _Spacing;

float _Speed;

float _EdgeSample;

float _NormalSensitivity;

float _DepthSensitivity;

CBUFFER_END

float4x4 _Matrix;

struct Attributes

{

float4 positionOS:POSITION;

float2 uv : TEXCOORD0;

};

struct Varyings

{

float4 positionHS : SV_POSITION;

float2 uv : TEXCOORD0;

float3 Direction:TEXCOORD1;

};

Varyings Vert(Attributes i)

{

Varyings o;

o.positionHS = TransformObjectToHClip(i.positionOS.xyz);

o.uv = i.uv;

int t = 0;

if (i.uv.x < 0.5 && i.uv.y < 0.5)

t = 0;

else if (i.uv.x > 0.5 && i.uv.y < 0.5)

t = 1;

else if (i.uv.x > 0.5 && i.uv.y > 0.5)

t = 2;

else

t = 3;

o.Direction = _Matrix[t].xyz;

return o;

}

// Used for outline (edge) detection

int sobel(Varyings i) // despite the name, compares the two diagonals (Roberts-cross style)

{

real depth[4];

real2 normal[4];

float2 uv[4]; // UVs of the four diagonal neighbours

uv[0] = i.uv + float2(-1, -1) * _EdgeSample * _MainTex_TexelSize.xy;

uv[1] = i.uv + float2(1, -1) * _EdgeSample * _MainTex_TexelSize.xy;

uv[2] = i.uv + float2(-1, 1) * _EdgeSample * _MainTex_TexelSize.xy;

uv[3] = i.uv + float2(1, 1) * _EdgeSample * _MainTex_TexelSize.xy;

for (int t = 0; t < 4; t++)

{

real4 depthnormalTex = SAMPLE_TEXTURE2D(_CameraDepthNormalsTexture, sampler_CameraDepthNormalsTexture, uv[t]);

normal[t] = depthnormalTex.xy; // encoded (not decoded) normal

depth[t] = depthnormalTex.z * 1.0 + depthnormalTex.w / 255.0; // linear 0-1 depth

}

// depth check

int Dep = abs(depth[0] - depth[3]) * abs(depth[1] - depth[2]) * _DepthSensitivity > 0.01 ? 1 : 0;

// normal check

float2 nor = abs(normal[0] - normal[3]) * abs(normal[1] - normal[2]) * _NormalSensitivity;

int Nor = (nor.x + nor.y) > 0.01 ? 1 : 0;

return saturate(Dep + Nor);

}

float4 Frag(Varyings i) :SV_Target{

int outline = sobel(i);

real4 tex = SAMPLE_TEXTURE2D(_MainTex, sampler_MainTex, i.uv);

real4 depthnormal = SAMPLE_TEXTURE2D(_CameraDepthNormalsTexture, sampler_CameraDepthNormalsTexture, i.uv);

float depth01 = depthnormal.z * 1.0 + depthnormal.w / 255.0;

depth01 *= _ProjectionParams.z; // * far: despite the name, this is now the linear eye depth

//float depth01 = LinearEyeDepth(SAMPLE_TEXTURE2D(_CameraDepthTexture, sampler_CameraDepthTexture, i.uv).x, _ZBufferParams).x;

float3 positionWS = _WorldSpaceCameraPos + depth01 * i.Direction + float3(0.01, 0.01, 0.01); // small offset so the drawn lines show up on the actual object surface

float3 positionWS01 = positionWS * _ZBufferParams.w;

float3 Line = step(1 - _Width, frac(positionWS / _Spacing));

float4 LineColor = Line.x * _ColorX + Line.y * _ColorY + Line.z * _ColorZ + outline * _OutlineColor;

#ifdef _AXIS_X

float mask = saturate(pow(abs(frac(positionWS01.x * 10 + _Time.y * 0.1 * _Speed) - 0.53), 10) * 200);

float mask2 = saturate(pow(abs(frac(positionWS01.x * 10 - _Time.y * 0.1 * _Speed) - 0.47), 10) * 200);

float mask3 = saturate(pow(abs(frac(positionWS01.z * 10 + _Time.y * 0.1 * _Speed) - 0.53), 10) * 200);

float mask4 = saturate(pow(abs(frac(positionWS01.z * 10 - _Time.y * 0.1 * _Speed) - 0.47), 10) * 200);

mask += mask2 + mask3 + mask4;

mask += step(0.95, mask);

#elif _AXIS_Y

float mask = saturate(pow(abs(frac(positionWS01.y * 10 + _Time.y * 0.1 * _Speed) - 0.75), 10) * 10);

mask += step(0.95, mask);

#elif _AXIS_Z

float mask = saturate(pow(abs(frac(positionWS01.z * 10 + _Time.y * 0.1 * _Speed) - 0.75), 10) * 10);

mask += step(0.95, mask);

#endif

return tex * saturate(1 - mask) + (LineColor + _ColorEdge) * mask; // an overlay color during the scan makes it look more convincing

}

ENDHLSL

Pass

{

HLSLPROGRAM

#pragma vertex Vert

#pragma fragment Frag

#pragma multi_compile_local _AXIS_X _AXIS_Y _AXIS_Z

ENDHLSL

}

}

}

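Summary

- Recovering the world position of a screen pixel is especially useful in post-processing, e.g. global fog and SSAO.

- The scan works like the earlier shield scan. Scan formulas generally follow this pattern and get tweaked from there.

Screen Lens Flare, and a Better Billboard Algorithm

Basics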

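- Transform the object-space origin (0,0,0,1) by M and V to get its view-space position, add the vertex's original object-space offset, then multiply by P to get the final vertex position.

That completes the basic billboard, and it is much simpler than before.

float4 pivotWS = mul(UNITY_MATRIX_M, float4(0, 0, 0, 1));

float4 pivotVS = mul(UNITY_MATRIX_V, pivotWS);

o.positionHS = mul(UNITY_MATRIX_P, pivotVS + float4(i.positionOS.xy, 0, 0)); // w = 0: the offset is a direction, and pivotVS.w is already 1

- Add rotation and scale control: as it stands, this vertex transform ignores the object's rotation and scale.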

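Approach: scale and rotate the vertex's x, y in object space (a billboard doesn't need z), then feed the result into the vertex transform above.

- 2D rotation matrix:

$$R(a) = \begin{pmatrix} \cos a & -\sin a \\ \sin a & \cos a \end{pmatrix}$$

- Get the GameObject's world-space scale (the lengths of the first three columns of M):

float ScaleX = length(float3(UNITY_MATRIX_M[0].x, UNITY_MATRIX_M[1].x, UNITY_MATRIX_M[2].x));

float ScaleY = length(float3(UNITY_MATRIX_M[0].y, UNITY_MATRIX_M[1].y, UNITY_MATRIX_M[2].y));

float ScaleZ = length(float3(UNITY_MATRIX_M[0].z, UNITY_MATRIX_M[1].z, UNITY_MATRIX_M[2].z));

- Follow Unity's order: scale first, then rotate.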

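- Make the flare, with a fade-in/fade-out effect.

- The fade only needs a single controlling value (alpha). How is alpha computed?

- Sample the screen depth texture over a small area around the object's center and compare depth values. Samples that are occluded fail the test.

- Alpha = unoccluded samples / total samples: alpha = passCount / totalSampleCount;

- Clip-space position with the perspective divide: pos.xy / pos.w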
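The billboard vertex math and the occlusion-count alpha from the list above can be sketched on the CPU as follows. All names are hypothetical. flare_alpha assumes the scene depths are linear eye depths, where larger means farther. In the shader the pivot's depth would come from its clip-space position and the scene depths from the depth texture.

```python
import math


def billboard_vertex_vs(pivot_vs, local_xy, angle, scale_xy):
    """View-space position of one billboard vertex.
    pivot_vs: object origin after M and V, as (x, y, z, 1).
    local_xy: the vertex's object-space x, y (z is ignored)."""
    # Scale first, then rotate (Unity's order).
    x = local_xy[0] * scale_xy[0]
    y = local_xy[1] * scale_xy[1]
    c, s = math.cos(angle), math.sin(angle)
    rx, ry = c * x - s * y, s * x + c * y  # 2D rotation matrix R(a)
    # The offset is a direction (w = 0), so pivot_vs keeps w == 1.
    return (pivot_vs[0] + rx, pivot_vs[1] + ry, pivot_vs[2], pivot_vs[3])


def flare_alpha(scene_depths, pivot_depth):
    """alpha = passCount / totalSampleCount. A sample passes when the scene
    depth sampled there is not in front of the flare's pivot.
    Assumes linear eye depths (larger = farther)."""
    passed = sum(1 for d in scene_depths if d >= pivot_depth)
    return passed / len(scene_depths)
```

With a 90 degree rotation and scale (2, 1), the local vertex (1, 0) ends up at offset (0, 2) from the pivot. Rotating before scaling would give (0, 1), which is why the order matters. One occluded sample out of four gives alpha = 0.75.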

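Code

Shader "Unlit/ADS Pro"

Summary

- Linear depth can be obtained as the negated view-space z (-z), since the camera looks down -z in Unity's view space.

- Handle platform differences: check UNITY_UV_STARTS_AT_TOP and flip y where needed.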

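Screen-Space Decals

Basics

- Unity only provides official decals starting with version 2021.2: Unity URP official documentation, 2021.2

- Decal component: Decal Projector Component

- Shader: Shader Graphs/Decal

- To use it, add the Decal RenderFeature

The VisualEffectGraph particle system provides a built-in ForwardDecal.

Implementing a screen-space decal ourselves

Code

Shader "Unlit/Decal"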

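Summary

- ddx and ddy: partial derivatives with respect to screen x and y

ddx(v) = value of v at the pixel to the right - value of v at this pixel

ddy(v) = value of v at the pixel below - value of v at this pixel

ddx(float3(1,2,3)) = float3(0,0,0) // every pixel using this shader outputs the same float3(1,2,3), so the difference is float3(0,0,0)

In other words, ddx(v) is how much v changes across one pixel horizontally on screen, and ddy(v) is how much it changes across one pixel vertically. GPUs evaluate these per 2x2 pixel quad, so they are finite differences between neighbouring pixels rather than exact derivatives.

Explanation: the code uses float3 decalNormal = normalize(cross(ddy(decalPos), ddx(decalPos)));

Once we have the object-space decalPos, ddy(decalPos) gives the vector pointing down one pixel and ddx(decalPos) the vector pointing right one pixel. These are the local x and y tangent vectors of the surface at decalPos, and their cross product gives the z vector, which is perpendicular to both (the surface normal).

Roughly: ddx(decalPos) = decalPos computed at (u+1, v) - decalPos computed at (u, v). In use this is pos2 - pos1, the vector from pos1 to pos2.

- A note so I don't get confused later: below, M and V are transform matrices and I_M, I_V are their inverses (not transposes; the transpose equals the inverse only for a pure rotation).

positionVS = mul(V, mul(M, positionOS)); positionOS = mul(I_M, mul(I_V, positionVS));

Think of the transform as positionOS -> M -> V = positionVS. Going back retraces the path in reverse: positionVS -> I_V -> I_M = positionOS;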
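The ddx/ddy normal trick from the first bullet above can be reproduced with finite differences in Python. pos_at is a hypothetical function that returns the decalPos seen at a pixel. The sketch assumes screen y grows downward, as in the description of ddy. Swapping the operands of the cross product flips the normal, because cross(a, b) = -cross(b, a).

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def decal_normal(pos_at, px, py):
    """pos_at(px, py) returns the decalPos seen at pixel (px, py).
    Screen y grows downward, so (px, py + 1) is the pixel below."""
    p = pos_at(px, py)
    ddx = _sub(pos_at(px + 1, py), p)  # one pixel to the right
    ddy = _sub(pos_at(px, py + 1), p)  # one pixel down
    return _normalize(_cross(ddy, ddx))
```

For a flat surface whose position moves +x to the right and -y downward, the result is (0, 0, 1). For a tilted surface, the result is perpendicular to both finite-difference vectors.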

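SSAO: Screen-Space Ambient Occlusion

Theory

Reference: com.unity.render-pipelines.universal@12.1.1\ShaderLibrary\SSAO.hlsl

Ambient occlusion: how SSAO works

URP screen-space ambient occlusion post-processing (SSAO)

[Ray Tracing Series 16] Generating random directions in the positive hemisphere at a shading point

- First, note that all coordinates are converted to view (camera) space and all the work happens there.

Get the pixel's depth from the screen UV. In clip space (after the perspective divide) that depth value is the z coordinate. From uv and depth, reconstruct the pixel's view-space position.

Screen UV runs from 0 to 1 while clip-space xy (after the divide) runs from -1 to 1, so uv * 2 - 1 gives the clip-space xy.

Use renderingData.cameraData.GetGPUProjectionMatrix() to get the current camera's projection matrix P, and compute its inverse.

Multiply by the inverse to get the view-space position, then perform the homogeneous divide.

// Reconstruct the pixel's view-space position from UV and depth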

float3 ReconstructPositionVS(float2 uv, float depth) {

float4 positionInHS = float4(uv * 2 - 1, depth, 1);

float4 positionVS = mul(CustomInvProjMatrix, positionInHS);

positionVS /= positionVS.w;

return positionVS.xyz;

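}

With the current pixel's view-space position, randomly sample points in the hemisphere oriented along its normal, then compute each sample's AO contribution to the pixel.

Define the hemisphere radius _SampleRadius and the sample count _SampleCount. For generating the random hemisphere, see [Ray Tracing Series 16] Generating random directions in the positive hemisphere at a shading point.

float Random(float2 st) {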

return frac(sin(dot(st, float2(12.9898, 78.233))) * 43758.5453123);

}

float Random(float x) {

return frac(sin(x) * 43758.5453123);

}

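// Cosine-weighted random unit direction in the +z hemisphere

float3 RandomSphere(float3 positionVS, float index)

{

float r1 = Random(positionVS.xy + index); // mix in index: hashing positionVS.xy alone gives every sample of a pixel the same azimuth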

float r2 = Random(index);

float z = sqrt(1 - r2);

float th = 2 * PI * r1;

float x = cos(th) * sqrt(r2);

float y = sin(th) * sqrt(r2);

return float3(x, y, z);

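}

First generate a random unit vector in the hemisphere and transform it into view space (with the TBN matrix). Add this offset to the pixel's view-space position, then project the offset position back to a screen UV. Sample the depth at that UV and reconstruct the random point's view-space position.

float2 ReProjectToUV(float3 positionVS) {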

float4 positionHS = mul(CustomProjMatrix, float4(positionVS, 1));

return (positionHS.xy / positionHS.w + 1) * 0.5;

}

float3 offset = RandomSphere(positionVS, it);

offset = normalize(mul(TBN, offset));

float3 samplePositionVS = positionVS + offset * _SampleRadius;

float2 sampleUV = ReProjectToUV(samplePositionVS);

float sampleDepth = SampleDepth(sampleUV);

float3 hitPositionVS = ReconstructPositionVS(sampleUV, sampleDepth);

With the random sample point in hand, compute how much it occludes the current pixel (its AO contribution).
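The random hemisphere direction (RandomSphere) can be checked with a Python port. The hash functions are copied from the HLSL above, but Python evaluates them in double precision, so the actual values differ from the GPU's; only the properties carry over. The sketch assumes the sample index is mixed into r1 as well, so the samples of one pixel do not all share one azimuth. The construction z = sqrt(1 - r2) with radius sqrt(r2) produces a cosine-weighted distribution.

```python
import math


def frac(x):
    return x - math.floor(x)


def random2(st):
    """Port of Random(float2 st): frac(sin(dot(st, (12.9898, 78.233))) * 43758.5453123)."""
    return frac(math.sin(st[0] * 12.9898 + st[1] * 78.233) * 43758.5453123)


def random1(x):
    """Port of Random(float x)."""
    return frac(math.sin(x) * 43758.5453123)


def random_hemisphere(position_vs, index):
    """Cosine-weighted unit vector in the +z hemisphere, as in RandomSphere.
    Sketch assumption: index is also mixed into r1, so every sample of one
    pixel gets its own azimuth."""
    r1 = random2((position_vs[0] + index, position_vs[1] + index))
    r2 = random1(index)
    z = math.sqrt(1.0 - r2)   # cos(theta) = sqrt(1 - r2)
    th = 2.0 * math.pi * r1   # azimuth
    return (math.cos(th) * math.sqrt(r2), math.sin(th) * math.sqrt(r2), z)
```

Every result has unit length and z >= 0. In the shader it is then rotated into the pixel's normal frame with TBN and scaled by _SampleRadius. Note that index 0 always yields (0, 0, 1), because Random(0) is 0.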

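Code

Shader "Unlit/SSAO"

Summary

Screen-space cheat sheet:

To reconstruct a world position from uv and depth you need InvVP, the inverse of the VP matrix (view-space positions work the same way with the inverse of P). In both cases, first build the clip-space position and then apply the matching inverse transform, since positionCS = P * V * M * positionOS.

float3 ReconstructPositionWS(float2 uv, float depth) {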

float3 positionCS = float3(uv * 2 - 1, depth);

float4 positionWS = mul(_MatrixInvVP, float4(positionCS, 1));

positionWS /= positionWS.w;

return positionWS.xyz;

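}

Going the other way, a world position can be transformed into clip space CS. After the perspective divide, and after remapping xy from -1..1 to 0..1, xy is the UV and z is the depth value.

float3 Reproject(float3 positionWS) {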

float4 positionCS = mul(_MatrixVP, float4(positionWS, 1));

positionCS /= positionCS.w;

positionCS.xy = (positionCS.xy + 1) * 0.5;

return positionCS.xyz;

}

Convert the resulting 0-1 screen UV into pixel coordinates:

float2 pixelCoord = positionCS.xy * _MainTex_TexelSize.zw;
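The reconstruct/reproject pair in this cheat sheet should round-trip, and the Python sketch below lets you check that. The sketch assumes an OpenGL-style projection (Unity's Camera.projectionMatrix convention: view space looks down -z, NDC z in [-1, 1]). The GPU matrix from GL.GetGPUProjectionMatrix uses a different z range on some platforms. The round trip holds for any invertible matrix, provided the same matrix is used in both directions and it matches how the depth texture was written. For world space, pass VP and its inverse instead of P. The UV y flip is not handled here.

```python
import math


def mat_vec(m, v):
    """4x4 matrix (list of rows) times a column vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def invert4(m):
    """Gauss-Jordan inverse with partial pivoting."""
    a = [[float(x) for x in row] + [1.0 if r == c else 0.0 for c in range(4)]
         for r, row in enumerate(m)]
    for col in range(4):
        pivot = max(range(col, 4), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        pv = a[col][col]
        a[col] = [x / pv for x in a[col]]
        for r in range(4):
            if r != col:
                f = a[r][col]
                a[r] = [x - f * y for x, y in zip(a[r], a[col])]
    return [row[4:] for row in a]


def perspective(fov_deg, aspect, near, far):
    """OpenGL-style projection: view space looks down -z, NDC z in [-1, 1]."""
    f = 1.0 / math.tan(math.radians(fov_deg) * 0.5)
    return [[f / aspect, 0.0, 0.0, 0.0],
            [0.0, f, 0.0, 0.0],
            [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
            [0.0, 0.0, -1.0, 0.0]]


def reproject(m, pos):
    """Position -> (u, v, ndc_z), like Reproject / ReProjectToUV."""
    cs = mat_vec(m, [pos[0], pos[1], pos[2], 1.0])
    ndc = [c / cs[3] for c in cs[:3]]  # perspective divide
    return ((ndc[0] + 1.0) * 0.5, (ndc[1] + 1.0) * 0.5, ndc[2])


def reconstruct(inv_m, uv, depth):
    """(uv, depth) -> position, like ReconstructPositionVS / WS."""
    p = mat_vec(inv_m, [uv[0] * 2.0 - 1.0, uv[1] * 2.0 - 1.0, depth, 1.0])
    return [c / p[3] for c in p[:3]]  # homogeneous divide
```

A point on the view axis reprojects to uv (0.5, 0.5). Feeding a point's reprojected uv and depth back through reconstruct with the inverse matrix returns the original position.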

SSPR屏幕空间平面反射

基础

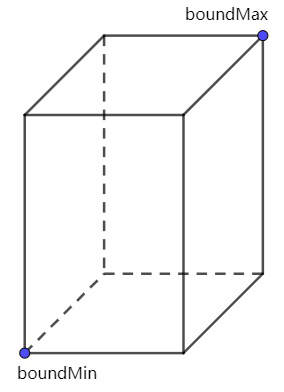

- 记录需要平面反射的平面,世界坐标以及法线,针对所有平面都有以下操作(一个坐标一个法线确定一个平面)

- 通过屏幕uv以及depth,重构世界坐标系

- 在ComputeShader中做反转变换:将世界坐标沿平面反转,得到新的反转点后再转换至屏幕空间得到uv2

- uv2的颜色就是反射uv1的颜色

- 将ComputShader反转后的图像,用于平面的渲染,渲染是需要判断当前深度》屏幕深度,则渲染图像上的颜色
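The mirror step in the list above is the standard point-plane reflection, sketched here in Python with hypothetical names and assuming a unit normal. In the compute shader this sits between reconstructing the world position at uv1 and reprojecting the mirrored point to get uv2.

```python
def reflect_across_plane(p, plane_pos, plane_normal):
    """Mirror point p across the plane through plane_pos with unit normal
    plane_normal: p' = p - 2 * dot(p - plane_pos, n) * n."""
    d = sum((p[i] - plane_pos[i]) * plane_normal[i] for i in range(3))  # signed distance
    return tuple(p[i] - 2.0 * d * plane_normal[i] for i in range(3))
```

Reflecting (1, 2, 3) across the ground plane y = 0 gives (1, -2, 3). Reflecting twice across the same plane returns the original point.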

Code

Shader: applied to the planes that need reflections; it composites the reflection onto them

Shader "URPLearn/PlanarReflection"

{

Properties

{

}

SubShader

{

ZTest Always ZWrite Off Cull Off

Tags { "RenderType" = "Opaque" "RenderPipeline" = "UniversalPipeline"}

Blend One One

HLSLINCLUDE

#include "Packages/com.unity.render-pipelines.core/ShaderLibrary/Common.hlsl"

#include "Packages/com.unity.render-pipelines.core/ShaderLibrary/Filtering.hlsl"

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Input.hlsl"

struct Attributes

{

float4 positionOS : POSITION;

float2 uv : TEXCOORD0;

UNITY_VERTEX_INPUT_INSTANCE_ID

};

struct Varyings

{

float4 positionHS : SV_POSITION;

float2 uv : TEXCOORD0;

float4 screenPos : TEXCOORD1;

UNITY_VERTEX_OUTPUT_STEREO

};

Varyings Vert(Attributes input)

{

Varyings output;

UNITY_SETUP_INSTANCE_ID(input);

UNITY_INITIALIZE_VERTEX_OUTPUT_STEREO(output);

output.positionHS = TransformObjectToHClip(input.positionOS.xyz);

output.uv = input.uv;

output.screenPos = ComputeScreenPos(output.positionHS);

return output;

}

TEXTURE2D(_ReflectionTex);

TEXTURE2D(_CameraDepthTexture);

SAMPLER(sampler_ReflectionTex);

SAMPLER(sampler_CameraDepthTexture);

CBUFFER_START(UnityPerMaterial)

CBUFFER_END

float4 Frag(Varyings i) : SV_Target

{

float2 screenUV = i.screenPos.xy / i.screenPos.w;

float depth = SAMPLE_TEXTURE2D(_CameraDepthTexture, sampler_CameraDepthTexture, screenUV).r;

if (i.positionHS.z >= depth) {

float4 color = SAMPLE_TEXTURE2D(_ReflectionTex, sampler_ReflectionTex, screenUV);

return color;

}

else {

discard;

return float4(0,0,0,0);

}

}

ENDHLSL

Pass

{

HLSLPROGRAM

#pragma vertex Vert

#pragma fragment Frag

ENDHLSL

}

}

}

RenderFeature: computes the reflection for the marked planes and renders them again

using System;

using UnityEngine;

using UnityEngine.Rendering;

using UnityEngine.Rendering.Universal;

namespace URPLearn

{

public class SSPRRenderFeather : ScriptableRendererFeature

{

class SSPRPlanarRenderPass : ScriptableRenderPass

{

private Material _material;

private SSPRTexGenerator _ssprTexGenerator = new SSPRTexGenerator();

private PlanarRendererGroups _planarRendererGroups = new PlanarRendererGroups();

public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData)

{

CommandBuffer cmd = CommandBufferPool.Get("SSPR-ReflectionTex");

ReflectPlane.GetVisiblePlanarGroups(_planarRendererGroups);

foreach (var group in _planarRendererGroups.PlanarRenderers)

{

cmd.Clear();

var planarDescriptor = group.descriptor;

var renderers = group.renderers;

_ssprTexGenerator.Render(cmd, this.colorAttachment, ref renderingData, ref group.descriptor);

cmd.SetRenderTarget(this.colorAttachment, this.depthAttachment);

foreach (var rd in renderers)

{

cmd.DrawRenderer(rd, _material);

}

_ssprTexGenerator.ReleaseTemporary(cmd);

context.ExecuteCommandBuffer(cmd);

}

CommandBufferPool.Release(cmd); // cmd came from CommandBufferPool.Get, so return it to the pool

}

public void Setup(Material material, ComputeShader computeShader, bool blur, bool excludeBackground)

{

_material = material;

_ssprTexGenerator.BindCS(computeShader);

_ssprTexGenerator.enableBlur = blur;

_ssprTexGenerator.excludeBackground = excludeBackground;

}

}

[SerializeField]

private Material _material;

[SerializeField]

private ComputeShader _computeShader;

[SerializeField]

private bool _blur;

[SerializeField]

private bool _excludeBackground;

SSPRPlanarRenderPass m_ScriptablePass;

/// <inheritdoc/>

public override void Create()

{

m_ScriptablePass = new SSPRPlanarRenderPass();

m_ScriptablePass.renderPassEvent = RenderPassEvent.BeforeRenderingPostProcessing;

}

// Here you can inject one or multiple render passes in the renderer.

// This method is called when setting up the renderer once per-camera.

public override void AddRenderPasses(ScriptableRenderer renderer, ref RenderingData renderingData)

{

if (renderingData.cameraData.renderType != CameraRenderType.Base)

{

return;

}

if (_material == null || _computeShader == null)

{

return;

}

m_ScriptablePass.Setup(_material, _computeShader, _blur, _excludeBackground);

m_ScriptablePass.ConfigureTarget(renderer.cameraColorTarget, renderer.cameraDepthTarget);

renderer.EnqueuePass(m_ScriptablePass);

}

}

public class SSPRTexGenerator

{

private static class ShaderProperties

{

public static readonly int Result = Shader.PropertyToID("_Result");

public static readonly int CameraColorTexture = Shader.PropertyToID("_CameraColorTexture");

public static readonly int PlanarPosition = Shader.PropertyToID("_PlanarPosition");

public static readonly int PlanarNormal = Shader.PropertyToID("_PlanarNormal");

public static readonly int MatrixVP = Shader.PropertyToID("_MatrixVP");

public static readonly int MatrixInvVP = Shader.PropertyToID("_MatrixInvVP");

public static readonly int MainTexelSize = Shader.PropertyToID("_MainTex_TexelSize");

}

private ComputeShader _computeShader;

private int _reflectionTexID;

private int _kernelClear;

private int _kernalPass1;

private int _kernalPass2;

/// <summary>

/// Whether to exclude pixels at infinity (e.g. the skybox) when generating the reflection texture

/// </summary>

private bool _excludeBackground;

/// <summary>

/// Blur

/// </summary>

private bool _enableBlur;

private BlurBlitter _blurBlitter = new BlurBlitter();

public SSPRTexGenerator(string reflectTexName = "_ReflectionTex")

{

_reflectionTexID = Shader.PropertyToID(reflectTexName);

}

public void BindCS(ComputeShader cp)

{

_computeShader = cp;

this.UpdateKernelIndex();

}

private void UpdateKernelIndex()

{

_kernelClear = _computeShader.FindKernel("Clear");

_kernalPass1 = _computeShader.FindKernel("DrawReflectionTex1");

_kernalPass2 = _computeShader.FindKernel("DrawReflectionTex2");

if (_excludeBackground)

{

_kernalPass1 += 2;

_kernalPass2 += 2;

}

}

public bool excludeBackground

{

get

{

return _excludeBackground;

}

set

{

_excludeBackground = value;

if (_computeShader)

{

this.UpdateKernelIndex();

}

}

}

public bool enableBlur

{

get

{

return _enableBlur;

}

set

{

_enableBlur = value;

}

}

public void Render(CommandBuffer cmd, RenderTargetIdentifier id, ref RenderingData renderingData, ref PlanarDescriptor planarDescriptor)

{

if (_computeShader == null)

{

Debug.LogError("Please assign the ComputeShader");

return;

}

var reflectionTexDes = renderingData.cameraData.cameraTargetDescriptor;

reflectionTexDes.enableRandomWrite = true;

reflectionTexDes.msaaSamples = 1;

cmd.GetTemporaryRT(_reflectionTexID, reflectionTexDes);

var rtWidth = reflectionTexDes.width;

var rtHeight = reflectionTexDes.height;

// View matrix

Matrix4x4 v = renderingData.cameraData.camera.worldToCameraMatrix;

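// Not yet clear to me: why not use renderingData.cameraData.GetProjectionMatrix() directly? (Likely because GL.GetGPUProjectionMatrix adapts it to the current graphics API's conventions, such as depth range, so it matches the depth texture.)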

Matrix4x4 p = GL.GetGPUProjectionMatrix(renderingData.cameraData.GetProjectionMatrix(), false);

// In the MVP transforms the matrix multiplies a column vector from the left (the vector is on the right), so VP = p * v;

var matrixVP = p * v;

var invMatrixVP = matrixVP.inverse;

// The ComputeShader's thread group size is (8,8,1)

int threadGroupX = reflectionTexDes.width / 8;

int threadGroupY = reflectionTexDes.height / 8;

RenderTargetIdentifier cameraColorTex = id;

// ComputeShader parameters

cmd.SetComputeVectorParam(_computeShader, ShaderProperties.MainTexelSize, new Vector4(1.0f / rtWidth, 1.0f / rtHeight, rtWidth, rtHeight));

cmd.SetComputeVectorParam(_computeShader, ShaderProperties.PlanarPosition, planarDescriptor.position);

cmd.SetComputeVectorParam(_computeShader, ShaderProperties.PlanarNormal, planarDescriptor.normal);

cmd.SetComputeMatrixParam(_computeShader, ShaderProperties.MatrixVP, matrixVP);

cmd.SetComputeMatrixParam(_computeShader, ShaderProperties.MatrixInvVP, invMatrixVP);

// Texture parameters can only be bound per kernel

cmd.SetComputeTextureParam(_computeShader, _kernelClear, ShaderProperties.Result, _reflectionTexID);

cmd.DispatchCompute(_computeShader, _kernelClear, threadGroupX, threadGroupY, 1);

// Pass 1: mirror the pixels

cmd.SetComputeTextureParam(_computeShader, _kernalPass1, ShaderProperties.CameraColorTexture, cameraColorTex);

cmd.SetComputeTextureParam(_computeShader, _kernalPass1, ShaderProperties.Result, _reflectionTexID);

cmd.DispatchCompute(_computeShader, _kernalPass1, threadGroupX, threadGroupY, 1);

// Pass 2: fix occlusion problems in the mirrored pixels

cmd.SetComputeTextureParam(_computeShader, _kernalPass2, ShaderProperties.CameraColorTexture, cameraColorTex);

cmd.SetComputeTextureParam(_computeShader, _kernalPass2, ShaderProperties.Result, _reflectionTexID);

cmd.DispatchCompute(_computeShader, _kernalPass2, threadGroupX, threadGroupY, 1);

if (_enableBlur)

{

_blurBlitter.SetSource(_reflectionTexID, reflectionTexDes);

_blurBlitter.blurType = BlurType.BoxBilinear;

_blurBlitter.iteratorCount = 1;

_blurBlitter.downSample = 1;

_blurBlitter.Render(cmd);

}

// Make the result a global texture (directly accessible to everything later in this cmd)

cmd.SetGlobalTexture(_reflectionTexID, _reflectionTexID);

}

public void ReleaseTemporary(CommandBuffer cmd)

{

cmd.ReleaseTemporaryRT(_reflectionTexID);

}

}

}

ReflectPlane: marks which planes need reflections

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using System.Threading.Tasks;

using UnityEngine;

namespace URPLearn

{

/// <summary>

/// A plane, defined by a point and a normal

/// </summary>

public struct PlanarDescriptor

{

public Vector3 position;

public Vector3 normal;

public static bool operator ==(PlanarDescriptor p1, PlanarDescriptor p2)

{

return IsNormalEqual(p1.normal, p2.normal) && IsPositionInPlanar(p1.position, p2);

}

public static bool operator !=(PlanarDescriptor p1, PlanarDescriptor p2)

{

return !IsNormalEqual(p1.normal, p2.normal) || !IsPositionInPlanar(p1.position, p2);

}

public override bool Equals(object obj)

{

if (obj == null)

{

return false;

}

if (obj is PlanarDescriptor p)

{